12 February 2019

How I created integration tests in a microservice architecture with Docker platform

The kind of integration tests described here wouldn’t exist without the microservices “revolution”. For years, we developed apps using the good old monolithic architecture. Everything was in one place, tightly coupled, and could be tested by a single request.

Then SOA and microservices happened and things changed. We started to build systems consisting of many small pieces, each exposing its own interface to communicate with, but also having its own external dependencies (for example, databases).

Those systems require a little more than running a single “do tests” command, because everything needs to be started in the right order before you run your tests. So, to make it easier, we decided to make friends with the Docker platform and, trust me, it was worth it!

What is Docker?

The Docker platform is designed for developers and administrators to create, deploy and run distributed applications. It started as an open-source project and is now widely popular among the IT community and businesses. The tool helps optimise apps for the cloud: you can create, deploy and copy independent containers (lightweight software packages) from one environment to another.

Containerisation allows running application processes in separate, isolated containers that have their own memory, a network interface with a private IP address, and disk space where the operating system image and libraries are installed. When the application is ready, the developer packs it into a container with all the necessary parts and ships it as a single package.

Enough theory, let’s see how the Docker platform works and how it can be useful for your developers.

Docker platform to the rescue

Instead of setting up your services manually, you can use a tool called docker-compose. It allows you to compose your services into a fully working, complex app.

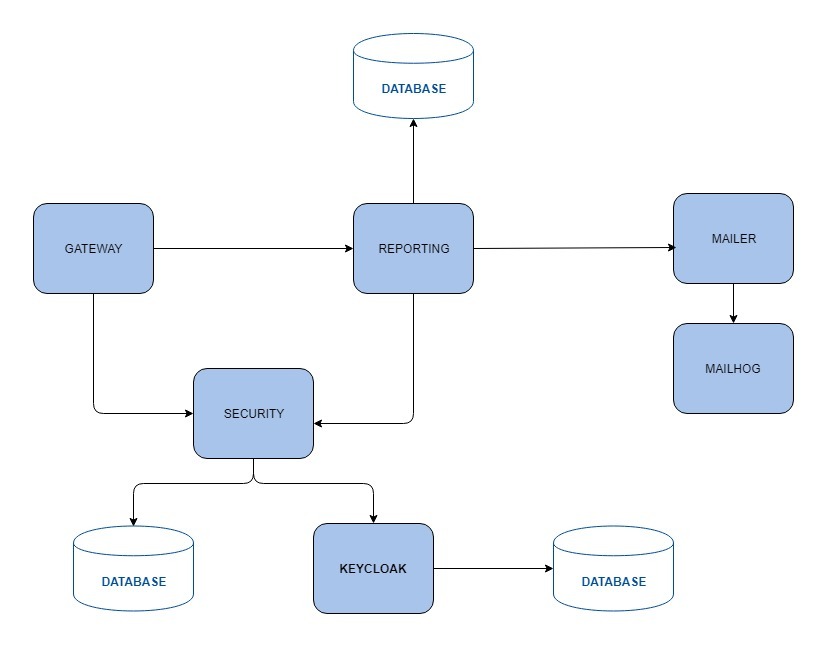

Let’s assume we have a simple system, consisting of four services, two databases and some external services, like Mailhog for testing email sending or Keycloak for handling authentication and authorisation.

Gateway handles communication with our system from the outside. Reporting is responsible for creating reports based on available data. It uses Security to find out what information a given user has access to and then sends that information out, so it needs to be connected to the Mailer service. In this example, we use MailHog for Mailer testing. Security uses Keycloak for authentication and for handling user data, but when it comes to ACL, we decided to create our own custom solution, so we need a DB.

So where do we start? First of all, we have to create a docker-compose configuration. A very basic one could look like the one below.

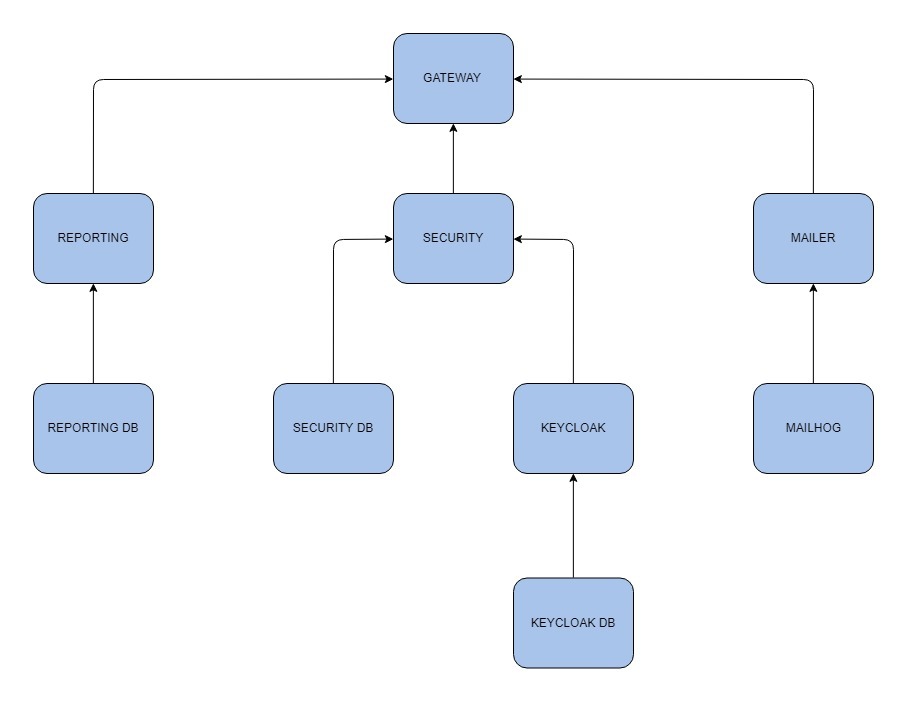

The key here is the depends_on section. By using it, we’re telling Docker to start a container only after all of its dependencies have been started. We could compare it to traversing a tree structure, where containers with dependencies are nodes and those without are leaves. Docker starts with the leaves and gradually moves up.

So, in order to make sure all the necessary services are up and running during our tests, we just need to containerise them and define their dependencies like so:
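The listing here is only a minimal sketch of such a configuration, not our exact file. The service names, build paths, image tags and the choice of PostgreSQL for both databases are assumptions made for illustration; the dependencies follow the system described earlier.

```yaml
# Sketch only: names, paths, images and credentials are assumptions.
version: "3"
services:
  security-db:
    image: postgres:11
    environment:
      POSTGRES_PASSWORD: example   # placeholder credential
  reporting-db:
    image: postgres:11
    environment:
      POSTGRES_PASSWORD: example   # placeholder credential
  keycloak:
    image: jboss/keycloak          # assumed Keycloak image
  mailhog:
    image: mailhog/mailhog
  security:
    build: ./security
    depends_on:                    # leaves are started first
      - security-db
      - keycloak
  mailer:
    build: ./mailer
    depends_on:
      - mailhog
  reporting:
    build: ./reporting
    depends_on:
      - reporting-db
      - security
      - mailer
  gateway:
    build: ./gateway
    depends_on:
      - reporting
  integration-tests:
    build: ./integration-tests
    command: npm run integration-tests
    depends_on:
      - gateway
```

With a file like this, running `docker-compose up integration-tests` starts the databases, Keycloak and MailHog first and the tests container last.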

But does it solve all of our problems? Unfortunately, when something is too good to be true, there is always a catch.

Here comes the edge case

We came across a strange case: even though our integration tests had their dependencies configured properly, we still had some false negatives. For an unknown reason, some of the services were not ready. How’s that even possible? Haven’t I just said that Docker should handle this for us?

The “depends_on” option only makes Docker wait until a dependency’s container has been started. It doesn’t necessarily mean that the underlying service (for example, a complex startup script or an HTTP server) is ready to handle communication. To deal with this, we need to delay the test command until all required services (not just containers) are up, for example by using a “wait for” script.

Let’s improve our integration tests container, assuming Keycloak is the one to blame:
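A sketch of what this could look like follows. It assumes a hypothetical `wait-for.sh` script bundled into the tests image that takes a `host:port` argument and then runs whatever comes after `--`, in the style of the commonly used community scripts. The script name, build path and service names are assumptions.

```yaml
# Sketch only: wait-for.sh and all names are assumptions.
services:
  integration-tests:
    build: ./integration-tests
    depends_on:
      - gateway
      - keycloak
    # wait-for.sh blocks until keycloak:8080 accepts TCP connections,
    # then runs the command given after "--".
    command: sh -c "./wait-for.sh keycloak:8080 -- npm run integration-tests"
```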

We added a special script before our npm run integration-tests command. This script waits until port 8080 is open on the keycloak container. This change should be enough for our tests container to be useful.

It’s all about test control

At this point, everything worked perfectly. But what if we could control our containers during test runtime and check how our system behaves when something unexpected happens (for example, when our mailing system is down)?

There are tools like Netflix’s Simian Army that handle something similar. Their job is to randomly disable production instances to check whether others jump in and handle the traffic. I wouldn’t call it a testing tool, but rather a supervising tool.

We, on the other hand, would like to have full control over our containers from the code level. The problem is that you don’t really have access to the host’s Docker from inside the container itself. So how can we do this? The answer is simple: pass the Docker socket into the container! Say what?

Let’s modify our test container one more time:
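A minimal sketch, again with assumed service names and paths. The socket path shown is the default location of the Docker daemon socket on Linux hosts.

```yaml
# Sketch only: names, paths and wait-for.sh are assumptions.
services:
  integration-tests:
    build: ./integration-tests
    depends_on:
      - gateway
      - keycloak
    command: sh -c "./wait-for.sh keycloak:8080 -- npm run integration-tests"
    volumes:
      # Mount the host's Docker daemon socket into the tests container.
      - /var/run/docker.sock:/var/run/docker.sock
```

The tests image still needs a Docker client installed so that commands issued from the test code can talk to the mounted socket.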

As you can see, we’ve added a single volume to our container. By doing this, we can access all host containers from inside the tests container and control them. In order to run docker commands from the test code, you need an additional tool. At TSH we’ve decided on using shelljs, but you’re free to pick your own methods!

What’s next? Try Docker platform yourself!

If you’re building a system based on any kind of distributed architecture, integration tests are A MUST. Even though your services might work in isolation, it’s crucial to check if they work together.

What’s more, sometimes testing only the so-called “positive paths” is not enough. I would rather know how my system behaves when something goes wrong than be surprised by it. I am sure you can agree with this statement.

The Docker platform is getting widely popular, and the IT community is getting pretty crazy about it. Not only does it speed up the deployment process, it also reduces costs, boosts productivity, and reduces problems with application dependencies. The Docker platform and Docker Compose are something you should definitely try out yourself and recommend to your developers!