11 July 2023

How to scale WebSocket – horizontal scaling with WebSocket tutorial

As a developer, you probably know the difference between vertical and horizontal scaling. But if you don’t have much experience with the WebSocket protocol, you might not realize that scaling it horizontally is not nearly as straightforward as with a typical REST API. In this tutorial, we learn how to scale WebSocket servers horizontally using simple, practical examples. Let’s talk scaling WebSockets.

The WebSocket protocol has been around since 2008 (it was standardized as RFC 6455 in 2011), and it continues to gain ground in 2023 with new improvements and support from companies such as Mozilla. Its potential for improving speed and real-time capability through persistent connections and bidirectional communication, while reducing traffic, is well known. However, less experienced developers may find WebSocket difficult to scale.

What you’ll learn

Already wondering why? Don’t worry, that’s the first thing you’ll find out in this article, along with:

- the difference between horizontal and vertical scaling using WebSockets,

- solving a problem with state in WebSocket by using sticky sessions,

- mastering WebSocket broadcasting by using the Pub/Sub service.

OK, so what exactly is the problem with scaling WebSockets?

Vertical vs horizontal scaling – what’s the difference

When we start thinking about the development of an application, we usually focus first on an MVP and the most crucial features. That’s fine, as long as we are aware that at some point we will need to focus on scalability. For most REST APIs, that’s rather easy. However, it’s a whole different story when it comes to WebSockets.

We all know what scaling is, but do we know that there are two types of scaling – horizontal and vertical scaling?

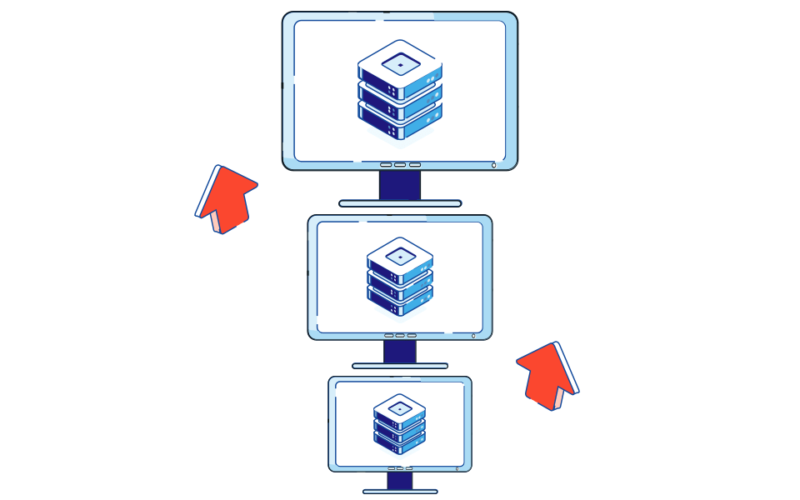

The first one is vertical scaling. It is by far the easiest way to scale your app, but at the same time, it has its limitations.

Vertical scaling is all about resources: adding more power to an existing machine rather than adding more machines. We keep a single instance of our application and just improve the hardware: a better CPU, more memory, faster I/O, etc. Vertical scaling doesn’t require any additional work on the application but, at the same time, it isn’t the most effective scaling option.

First of all, our code’s execution time does not improve linearly with better hardware. What’s more, we’re limited by the hardware improvements that are actually possible. Obviously, we cannot increase our CPU speed infinitely.

So, what about an alternative?

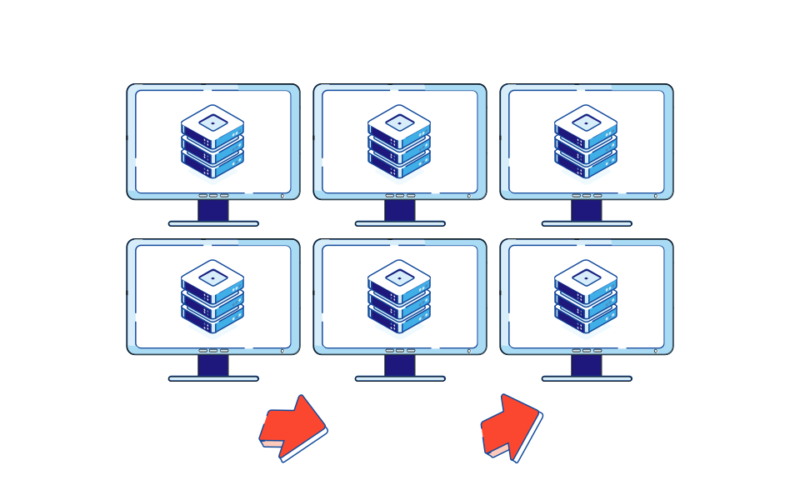

Instead of adding more resources to existing instances, we might think about creating additional ones. This is called horizontal scaling.

The horizontal scaling approach allows us to scale almost infinitely. Nowadays it’s even possible to scale dynamically: instances are added and removed depending on the current load. This is partly thanks to the rise of cloud computing.

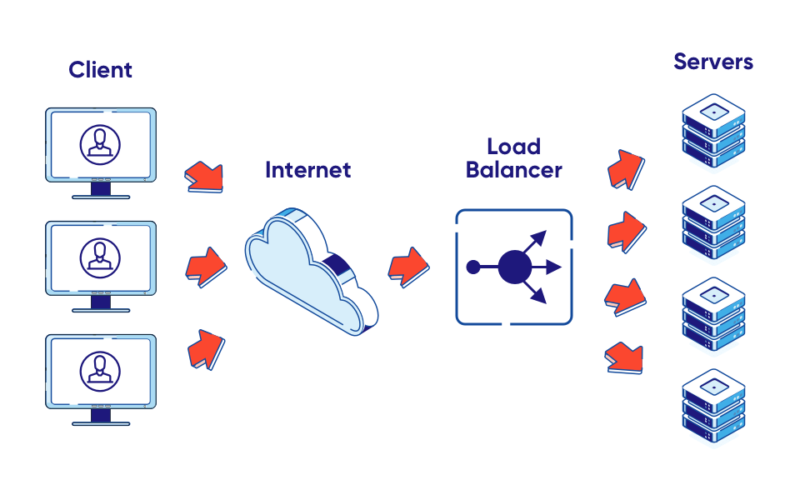

On the other hand, it requires a little more configuration, since you need at least one additional piece: a load balancer, which is responsible for distributing requests among the instances. For some systems, we also need to introduce additional services, such as messaging.

Horizontal scaling with WebSocket Issue #1: State

OK, let’s say we have two simple apps.

One is a simple REST API:
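
A minimal sketch of what such an API could look like, using only Python’s standard library. The `greet` function, the URL shape, and the port 3001 are assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def greet(name: str) -> dict:
    """Business logic: build the response payload for a given name."""
    return {"message": f"Hello, {name}!"}


class GreetHandler(BaseHTTPRequestHandler):
    """Stateless handler: nothing about the caller is kept between requests."""

    def do_GET(self) -> None:
        # Use the last path segment as the name, e.g. GET /hello/Ann -> "Ann".
        name = self.path.rstrip("/").split("/")[-1] or "world"
        body = json.dumps(greet(name)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 3001) -> None:
    """Serve forever on the given port (hypothetical default)."""
    HTTPServer(("127.0.0.1", port), GreetHandler).serve_forever()
```

Calling `run()` starts the server. Every request is handled from scratch, so any instance could answer it.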

The other one is a simple WebSocket API:
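
A comparable sketch, assuming a recent version of the third-party `websockets` package (imported lazily so the rest of the module works without it). The `greet` function and the port are again hypothetical.

```python
import asyncio


def greet(name: str) -> str:
    """Business logic: build the reply for a message containing a name."""
    return f"Hello, {name}!"


async def handle_connection(websocket) -> None:
    """Runs once per client and lives as long as the connection stays open."""
    async for message in websocket:  # one iteration per incoming message
        await websocket.send(greet(message))


async def run(port: int = 3001) -> None:
    """Serve forever on the given port (hypothetical default)."""
    import websockets  # third-party dependency: pip install websockets

    async with websockets.serve(handle_connection, "127.0.0.1", port):
        await asyncio.Future()  # never completes, so the server keeps running
```

`asyncio.run(run())` would start the server. Unlike the REST handler, `handle_connection` stays alive for the whole lifetime of one client’s connection.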

Even though the two APIs communicate in different ways, their code bases are fairly similar. What’s more, there is no difference between them when it comes to vertical scaling.

The problem arises with horizontal scaling.

To handle multiple instances, we need to introduce a load balancer. It is a special service responsible for distributing traffic evenly between instances according to a selected strategy. If a given server goes down, the load balancer redirects traffic to the servers that are still available. And each time a new WebSocket server shows up, the load balancer starts including it in the distribution.

HAProxy is an example of a load balancer. All we need is to provide a simple configuration.
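
A minimal sketch of such a configuration. The section names (`public`, `apps`), the server names, the ports, the timeouts, and the `/health` endpoint are all assumptions chosen for illustration.

```haproxy
defaults
    mode http
    timeout connect 5s
    timeout client  30s
    timeout server  30s
    timeout tunnel  1h    # keeps upgraded (WebSocket) connections open

frontend public
    bind *:8080           # public address that clients connect to
    default_backend apps

backend apps
    balance roundrobin    # the default strategy, spelled out for clarity
    option httpchk GET /health
    server app1 127.0.0.1:3001 check
    server app2 127.0.0.1:3002 check
```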

The configuration defines a frontend and backends.

The frontend is the public side: its address is the one clients use to reach our backends.

We need to specify an address for it and also the name of the backend that it forwards traffic to.

After that, we need to define our backends. In our case, we have two of them, both using the same IP, but different ports.

By default, HAProxy uses a round-robin strategy: each request is forwarded to the next backend on the list, and after the last one we start again from the first.

We also configure so-called health checks to make sure that requests won’t be forwarded to an inactive backend.
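
The idea can be sketched in a few lines of Python. This is a toy model of the strategy, not HAProxy’s implementation; `round_robin` and its parameters are hypothetical names.

```python
from itertools import cycle


def round_robin(backends, healthy, requests):
    """Assign each request to the next healthy backend, wrapping around."""
    if not any(backend in healthy for backend in backends):
        raise RuntimeError("no healthy backend available")
    rotation = cycle(backends)
    assignments = []
    for request in requests:
        backend = next(rotation)
        while backend not in healthy:  # skip backends failing health checks
            backend = next(rotation)
        assignments.append((request, backend))
    return assignments


print(round_robin(["app1", "app2"], {"app1", "app2"}, ["r1", "r2", "r3"]))
# [('r1', 'app1'), ('r2', 'app2'), ('r3', 'app1')]
```

If `app1` fails its health check, passing `healthy={"app2"}` sends every request to `app2`.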

So where is the problem?

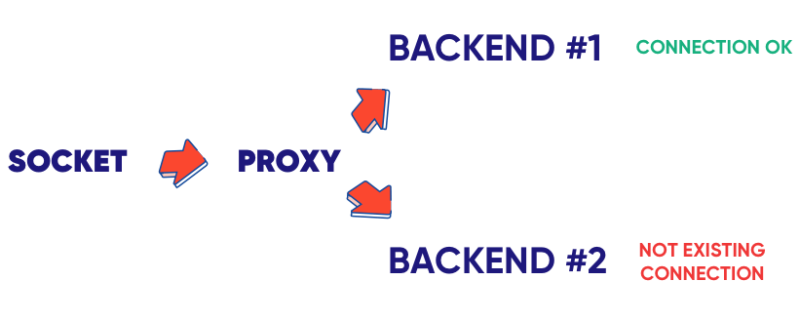

Most REST APIs are stateless. This means that nothing related to the user making a request is saved on the instance itself. That is not the case with WebSockets.

Each socket connection is bound to a specific instance, so we need to make sure that all the requests from specific users are forwarded to a particular backend.
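
A toy model makes the problem visible. It assumes two instances that each keep their connections in their own memory, and a follow-up request from the same user (for example a reconnect) that the balancer routes to the other instance. The class and all names are hypothetical.

```python
class Instance:
    """One application server; open sockets live only in its own memory."""

    def __init__(self, name):
        self.name = name
        self.connections = {}  # user id -> per-connection state

    def open_connection(self, user):
        self.connections[user] = {"messages_seen": 0}

    def handle(self, user):
        state = self.connections.get(user)
        if state is None:
            return f"{self.name}: unknown connection for {user}"
        state["messages_seen"] += 1
        return f"{self.name}: message {state['messages_seen']} from {user}"


app1, app2 = Instance("app1"), Instance("app2")
app1.open_connection("ann")  # the connection is established on app1
print(app1.handle("ann"))    # app1: message 1 from ann
print(app2.handle("ann"))    # app2: unknown connection for ann
```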

How to fix it?

The solution: sticky sessions

What we are looking for is a sticky session (sticky connection). Thankfully, we are using HAProxy, so the only thing that needs to be done is some configuration tweaks.
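
A minimal sketch of a backend section with these tweaks. The backend and server names, the ports, and the cookie name `SERVERID` are assumptions.

```haproxy
backend apps
    balance leastconn                          # new users go to the least busy server
    cookie SERVERID insert indirect nocache    # HAProxy sets the cookie itself
    server app1 127.0.0.1:3001 check cookie app1
    server app2 127.0.0.1:3002 check cookie app2
```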

First of all, we’ve changed the balancing strategy. Instead of using round-robin, we decided to go with leastconn. This will make sure that a new user is connected to the instance with the lowest overall number of connections.

The second change is to mark every request from a single user with a cookie that contains the name of the backend to be used.

After that, the only thing left is to tell HAProxy which backend should be used for a given cookie value.
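
The combined behavior can be modeled roughly as follows. This is a simplified sketch rather than HAProxy’s actual logic: `StickyBalancer` is a hypothetical class, and the cookie value is assumed to be the backend name.

```python
class StickyBalancer:
    """Toy model of leastconn balancing combined with a backend cookie."""

    def __init__(self, backends):
        # Number of open connections per backend.
        self.active = {name: 0 for name in backends}

    def connect(self, cookie=None):
        """Open a connection; return (backend, cookie to set on the client)."""
        if cookie in self.active:
            backend = cookie  # known cookie: stick to the same backend
        else:
            # New user: pick the backend with the fewest open connections.
            backend = min(self.active, key=self.active.get)
        self.active[backend] += 1
        return backend, backend


balancer = StickyBalancer(["app1", "app2"])
first, cookie = balancer.connect()         # new user, lands on app1
second, _ = balancer.connect()             # new user, app2 is now least busy
again, _ = balancer.connect(cookie)        # returning user, sticks to app1
print(first, second, again)                # app1 app2 app1
```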

At this point we should be fine with handling the single user’s messages. But what about broadcasting?

Horizontal scaling with WebSocket Issue #2: Broadcasting

Now, on to broadcasting. Let’s start by adding a new function to our WebSocket server so that we can send a message to all connected clients at once.
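
A minimal sketch of such a function, using toy stand-ins instead of a real WebSocket library. `WebSocketServer`, `Client`, and their methods are hypothetical names.

```python
class Client:
    """Toy stand-in for one connected WebSocket client."""

    def __init__(self, name):
        self.name = name
        self.inbox = []

    def send(self, message):
        self.inbox.append(message)


class WebSocketServer:
    """Toy stand-in for one server instance and its connected clients."""

    def __init__(self):
        self.clients = []

    def connect(self, client):
        self.clients.append(client)

    def broadcast(self, message):
        """Send the message to every client connected to this instance."""
        for client in self.clients:
            client.send(message)


server = WebSocketServer()
ann, bob = Client("ann"), Client("bob")
server.connect(ann)
server.connect(bob)
server.broadcast("hello everyone")
print(ann.inbox, bob.inbox)  # ['hello everyone'] ['hello everyone']
```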

It looks fine. So where is the catch?

The WebSocket server knows only about the clients connected to this specific instance. This means we’re sending the message only to the clients connected to the same server, not to all of them.

The solution: Pub/Sub

The easiest option is to introduce communication between different instances. For example, all of them could be subscribed to a specific channel and handle incoming messages.

This is what we call publish-subscribe, or pub/sub. There are many ready-to-go solutions, like Redis, Kafka, or NATS.

Let’s start with the channel subscription method.

- First of all, we need to separate clients for the subscriber and the publisher. That’s because the client in the subscriber mode is allowed to perform only commands related to the subscription, so we cannot use the publish command on that client.

- However, we can use a duplicate method to create a copy of a specific Redis client.

- After that, we subscribe to a message event. By doing this, we will get every message published on the channels we subscribed to. Of course, we’re also getting information about the channel it was published on.

- The last step is to run a publish method to send a message to a specific channel.
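
The steps above can be sketched with a toy in-memory model. It is not the API of any real Redis client: `Broker`, `Connection`, and the method names are hypothetical and only mirror the behavior described in the list, including the rule that a connection in subscriber mode cannot publish.

```python
class Broker:
    """In-memory stand-in for the pub/sub service."""

    def __init__(self):
        self.channels = {}  # channel -> list of subscribed connections


class Connection:
    """Toy client connection modeled on the steps above."""

    def __init__(self, broker):
        self.broker = broker
        self.subscriber_mode = False
        self.handlers = []

    def duplicate(self):
        """Create a fresh connection to the same broker."""
        return Connection(self.broker)

    def on_message(self, handler):
        """Register handler(channel, message) for incoming messages."""
        self.handlers.append(handler)

    def subscribe(self, channel):
        self.subscriber_mode = True
        self.broker.channels.setdefault(channel, []).append(self)

    def publish(self, channel, message):
        if self.subscriber_mode:
            raise RuntimeError("a connection in subscriber mode cannot publish")
        receivers = self.broker.channels.get(channel, [])
        for connection in receivers:
            for handler in connection.handlers:
                handler(channel, message)
        return len(receivers)  # how many subscribers received the message


publisher = Connection(Broker())
subscriber = publisher.duplicate()
received = []
subscriber.on_message(lambda channel, message: received.append((channel, message)))
subscriber.subscribe("chat")
publisher.publish("chat", "hello")
print(received)  # [('chat', 'hello')]
```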

And now, onto the last part. Let’s connect it with our WebSocket code:
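
A minimal sketch of the wiring, again with in-memory stand-ins rather than a real Redis client or WebSocket library. The `Broker` and `Instance` classes and the channel name `broadcast` are assumptions, and clients are modeled as plain inbox lists.

```python
from collections import defaultdict


class Broker:
    """In-memory stand-in for the pub/sub service (for example Redis)."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # channel -> callbacks

    def subscribe(self, channel, callback):
        self.subscribers[channel].append(callback)

    def publish(self, channel, message):
        for callback in self.subscribers[channel]:
            callback(channel, message)


class Instance:
    """One WebSocket server instance; clients are modeled as inbox lists."""

    CHANNEL = "broadcast"  # hypothetical channel name

    def __init__(self, broker):
        self.broker = broker
        self.clients = []
        broker.subscribe(self.CHANNEL, self.on_message)

    def connect(self):
        inbox = []
        self.clients.append(inbox)
        return inbox

    def broadcast(self, message):
        # Publish on the channel instead of sending to local clients directly.
        self.broker.publish(self.CHANNEL, message)

    def on_message(self, channel, message):
        # Every instance receives the message and forwards it to its own clients.
        for inbox in self.clients:
            inbox.append(message)


broker = Broker()
app1, app2 = Instance(broker), Instance(broker)
ann, bob = app1.connect(), app2.connect()
app1.broadcast("hello everyone")
print(ann, bob)  # ['hello everyone'] ['hello everyone']
```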

Instead of sending messages to the WebSocket clients right away, we publish them on a channel and then handle them separately.

By doing this, we’re sure that the message is published to every instance and then sent to users.

How to scale WebSocket – single server & multi server

Whether we’re talking about a single web server or multiple WebSocket servers, WebSocket scaling is not a trivial task.

- You cannot just increase the number of WebSocket server instances, because it won’t work right away.

- However, with the help of a few tools, we are able to build a fully scalable architecture with multiple server processes.

- All we need is a load balancer (such as HAProxy or even Nginx) configured with sticky sessions, plus a messaging system.

Do you feel like you’re now ready to make your WebSocket-based web app more scalable using the horizontal scaling approach?
