01 March 2023

OWASP Top 10 Privacy Risks – use these best practices to protect your clients

Data privacy is a topic few people want to talk about until something goes wrong. Luckily, the OWASP Top 10 Privacy Risks 2021 ranking gives you a ready-made list of privacy issues to check your app against. To save you even more time, we summed it up in the form of an actionable guide. Get to know the threats, avoid them, and spare yourself angry users, PR disasters, lawsuits, and a big headache in the process!

OWASP security rankings such as…

- OWASP Top 10 API,

- OWASP Top 10 web application vulnerabilities,

- or OWASP Top 10 mobile

bring tons of valuable up-to-date insights on how to protect your software from security threats.

What is the OWASP Top 10 Privacy Risks ranking?

The OWASP Top 10 Privacy Risks 2021 is less well-known than the rankings I mentioned before but just as important and useful. The privacy issues it lists include both technological and organizational aspects of web applications. It focuses on real threats rather than just legal matters.

Naturally, it also provides advice on how to prevent or overcome these threats based on the OECD Privacy Guidelines. As such, you can use it for data privacy risk assessment in web development.

Top 10 Privacy Risks 2014

The original ranking hit the digital shelves in 2014, and many new tools and techniques have emerged since then. The General Data Protection Regulation (GDPR) also played a part in making the original ranking somewhat obsolete.

As a reminder, the 2014 threats were as follows:

- Web Application Vulnerabilities

- Operator-sided Data Leakage

- Insufficient Data Breach Response

- Insufficient Deletion of User Data

- Non-Transparent Policies, Terms, and Conditions

- Collection of Data Not Required for the User-Consented Purpose

- Sharing of Data with Third Parties

- Outdated Personal Data

- Missing or Insufficient Session Expiration

- Insecure Data Transfer

How has the list changed since then? Let’s not waste any more time and dive right in!

#1 Web Application Vulnerabilities

⏱️ Frequency: High,

🤜 Impact: Very high,

🔎 Type: Organizational

This privacy risk is a clear shout-out to the OWASP Top 10 web app vulnerabilities list. It’s all about how you design your web app and whether you are able to respond immediately to any possible issues that may result in data privacy breaches.

In order to do that, you need to:

- perform penetration tests,

- do regular vulnerability and privacy checkups with tools such as Static Application Security Testing (SAST), Interactive Application Security Testing (IAST), or Dynamic Application Security Testing (DAST),

- implement appropriate countermeasures,

- educate programmers and architects on web development security,

- introduce safe processes such as Security Development Lifecycle (SDL) and DevSecOps,

- patch your app as needed, following industry best practices.
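Real SAST, IAST, and DAST tools do far more than this, but a tiny script shows what an automated, repeatable checkup can look like. The Python sketch below flags recommended HTTP security headers that are missing from a response. The header list and the function name `missing_security_headers` are illustrative assumptions, not a complete policy.

```python
# A minimal, DAST-style sketch: flag recommended security headers that are
# missing from an HTTP response. The header set is an illustrative assumption.
RECOMMENDED_HEADERS = {
    "strict-transport-security",
    "content-security-policy",
    "x-content-type-options",
    "referrer-policy",
}


def missing_security_headers(response_headers: dict[str, str]) -> list[str]:
    """Return recommended headers absent from the response, sorted by name.

    HTTP header names are case-insensitive, so they are compared in lowercase.
    """
    present = {name.lower() for name in response_headers}
    return sorted(RECOMMENDED_HEADERS - present)


if __name__ == "__main__":
    headers = {
        "Content-Type": "text/html",
        "Strict-Transport-Security": "max-age=31536000; includeSubDomains",
        "X-Content-Type-Options": "nosniff",
    }
    print(missing_security_headers(headers))
    # prints ['content-security-policy', 'referrer-policy']
```

In practice, you could pass it the headers of a live response and run it as one step of a CI pipeline, so regressions show up before release rather than after.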

How do companies implement it in practice?

TSH response

At The Software House, we do the following:

- For each interested client, we carry out a vulnerability assessment. This lets us catch many vulnerabilities before the app is even released.

- In addition, we set up a security team made up of DevOps, QA, and security specialists as well as a CTO. This makes communication during analysis and debugging easier.

- Finally, we developed company-wide standards based on our own experience from numerous large commercial projects. They include a list of processes to follow when a vulnerability is found and a pre-deploy security checklist. Shortened versions of both documents are available to all team members, while the security team handles specialized information, audits, and consultations.

Let’s move on to more specific vulnerabilities.

#2 Operator-sided Data Leakage

⏱️ Frequency: High,

🤜 Impact: Very high,

🔎 Type: Organizational / Technical

This one is all about preventing leaks of any user data whose loss would constitute a breach of confidentiality. Sometimes a leak is caused by an actual cyberattack, but it is also often the result of errors, including botched access control management, improper data storage, or data duplication.

The best way to prevent all this is to be picky about your data operators. Before you entrust them with your data, you might want to:

- check their history of data breaches as well as their data privacy best practices,

- find out whether they participate in a bug bounty program (which rewards researchers for reporting bugs),

- make sure that their country of origin upholds high data security standards,

- enquire about their information security certificates such as ISO/IEC 27001/2, ISO/IEC 27017/18, or ISO/IEC 27701,

- perform an audit to find out more about how they handle data (e.g. whether they have a dedicated data privacy team and security training, and how they anonymize data, manage access, and handle encryption keys).

You should also always keep in mind:

- authentication (including multi-factor authentication), authorization, and access management,

- the principle of least privilege – only give users access to what they need to perform a task,

- privacy by design,

- using strong encryption for all of your user data (including data at rest), especially on portable storage devices,

- implementing best practices such as proper training, monitoring, and personal data anonymization or pseudonymization,

- introducing data classification and information management policies.
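Pseudonymization is easy to get wrong with a plain hash, because common identifiers such as email addresses can simply be guessed and re-hashed. One common approach, sketched below in Python, is a keyed hash (HMAC-SHA-256): the same person always maps to the same token, but the mapping can’t be recomputed without the secret key. Key handling is simplified here; the in-process key and the `pseudonymize` helper are assumptions for illustration. Keep in mind that under GDPR, pseudonymized data still counts as personal data.

```python
import hashlib
import hmac
import secrets

# Simplified for illustration: a real key would come from a secrets manager
# and be stored separately from the pseudonymized records.
PSEUDONYMIZATION_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYMIZATION_KEY) -> str:
    """Replace a direct identifier with a keyed, deterministic token.

    The identifier is normalized first, so trivial formatting differences
    (surrounding spaces, letter case) map to the same token.
    """
    normalized = identifier.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    record = {"email": "Jane.Doe@example.com", "order_total": 129.99}
    # Analytics only ever sees the token, never the raw email address.
    safe_record = {**record, "email": pseudonymize(record["email"])}
    print(safe_record["email"] == pseudonymize(" jane.doe@example.com "))
    # prints True
```

Because the token is deterministic, records belonging to the same user can still be joined for analysis, while rotating or destroying the key breaks the link entirely.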

How does it work in practice?

TSH response

At my company, all of these best practices are woven into the development process. We implement them through audits, vulnerability assessments, and consultations with the team. Over time, we developed a reusable set of data security best practices, which we also adapt to the specific needs of particular clients. Security training is also a big part of our onboarding. Each new employee learns about data processing, multi-factor authentication, password managers such as 1Password, phishing prevention, the importance of backups, and more.

Beyond that, we rely on service providers that care about data privacy and adhere to GDPR, ISO standards, etc., such as AWS for hosting and cloud computing and Firebase for app development.

#3 Insufficient Data Breach Response

⏱️ Frequency: High,

🤜 Impact: Very high,

🔎 Type: Organizational / Technical

If you don’t properly inform the victims of a sensitive data exposure, your data breach response is insufficient. Failing to act on these risks may result not only in lost clients but also in very costly lawsuits and financial penalties. One Polish online retailer learned this the hard way when Poland’s Personal Data Protection Office (UODO) fined it €645,000 for a data breach that affected roughly 2.2 million customers.

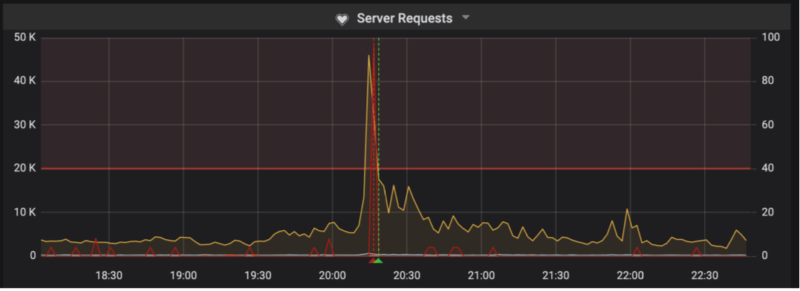

First of all, you need a data breach response plan, and you need to test and update it regularly. The plan should be backed by a dedicated team and incident monitoring software (Security Information and Event Management, or SIEM). After each incident, verify whether the response was sufficient, timely, precise, and easy to understand for all relevant parties.
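If GDPR applies to you, the plan also has to respect a hard deadline: Article 33 requires notifying the supervisory authority without undue delay and, where feasible, within 72 hours of becoming aware of a breach. A response-tracking tool might compute that window as in the Python sketch below. The function names are hypothetical, and the sketch covers only the notification to the authority, not notifying affected users.

```python
from datetime import datetime, timedelta, timezone

# GDPR Article 33: notify the supervisory authority without undue delay and,
# where feasible, within 72 hours of becoming aware of the breach.
NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(became_aware_at: datetime) -> datetime:
    """Latest time to notify the authority, counted from awareness."""
    return became_aware_at + NOTIFICATION_WINDOW


def hours_remaining(became_aware_at: datetime, now: datetime) -> float:
    """Hours left before the 72-hour window closes (negative if overdue)."""
    remaining = notification_deadline(became_aware_at) - now
    return remaining.total_seconds() / 3600


if __name__ == "__main__":
    aware = datetime(2023, 3, 1, 9, 0, tzinfo=timezone.utc)
    now = datetime(2023, 3, 2, 15, 0, tzinfo=timezone.utc)
    print(notification_deadline(aware).isoformat())  # 2023-03-04T09:00:00+00:00
    print(hours_remaining(aware, now))  # 42.0
```

Using timezone-aware timestamps avoids off-by-an-hour mistakes when the response team works across time zones or around daylight saving changes.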

TSH response

We consider a good data breach response team, or Computer Emergency Response Team (CERT), to be the key. Then, it is all about monitoring. Once a breach occurs, the response team assembles to verify the vulnerability and decide whether anyone should be informed about it, and how. Each incident is assigned a priority, and the response is carried out accordingly.

We have our own procedures for all of these actions, but we also align them with the best practices of our clients.

#4 Consent on Everything

⏱️ Frequency: Very High,

🤜 Impact: Very high,

🔎 Type: Organizational

Misuse of consent for the processing of personal data is increasingly common.

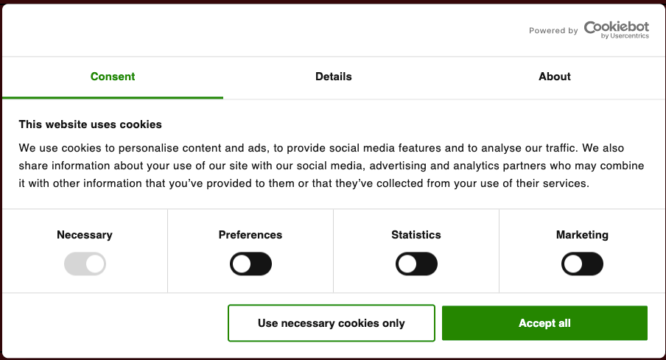

Companies often ask for consent to “everything” rather than for each purpose separately (e.g. using the website and ad profiling are two separate purposes). You must have seen those cookie pop-ups that tell you something about using cookies and pretty much nothing else. Users should be able to give and withdraw consent for each activity separately. They should also be able to remove unnecessary data.

TSH response

To prevent this, you need to:

- check if the consents are aggregated and processed properly,

- check if consents for non-essential actions and data are switched off by default,

- collect consents for each goal separately.
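Storing consent per purpose, rather than as a single “accept all” flag, makes all three checks straightforward. Below is a minimal Python sketch, assuming a hypothetical set of optional purposes. Essential processing (such as running the site itself) is left out, because it typically relies on a legal basis other than consent.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical purposes, each consented to (and withdrawn) separately.
OPTIONAL_PURPOSES = {"analytics", "ad_profiling", "newsletter"}


@dataclass
class ConsentRecord:
    user_id: str
    # purpose -> (granted, time the decision was recorded), kept as evidence
    decisions: dict[str, tuple[bool, datetime]] = field(default_factory=dict)

    def record(self, purpose: str, granted: bool) -> None:
        """Store a grant or a withdrawal for a single purpose."""
        if purpose not in OPTIONAL_PURPOSES:
            raise ValueError(f"unknown purpose: {purpose}")
        self.decisions[purpose] = (granted, datetime.now(timezone.utc))

    def allows(self, purpose: str) -> bool:
        """Non-essential purposes stay off until the user explicitly opts in."""
        decision = self.decisions.get(purpose)
        return decision is not None and decision[0]


if __name__ == "__main__":
    consent = ConsentRecord("user-42")
    print(consent.allows("analytics"))  # False: off by default
    consent.record("analytics", True)
    print(consent.allows("ad_profiling"))  # False: consent is per purpose
    consent.record("analytics", False)  # withdrawal
    print(consent.allows("analytics"))  # False
```

Rejecting unknown purposes is a deliberate design choice: it prevents a vague catch-all purpose from sneaking back in.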

#5 Non-Transparent Policies, Terms, and Conditions

⏱️ Frequency: Very High,

🤜 Impact: High,

🔎 Type: Organizational

This one is clearly related to the previous one. It’s about unclear information on how user data is gathered, stored, and processed.

This problem comes with some surprising and eye-opening viewpoints. For example, in her article titled “Stop Thinking About Consent: It Isn’t Possible and It Isn’t Right”, digital privacy philosopher Helen Nissenbaum argues that organizations cannot produce a fully transparent consent notice because, in the dynamic world of new technologies, they simply cannot know for certain how the gathered data will be used. What’s more, consenting to data processing is often a precondition for using a given website. She argues that privacy is really about “an appropriate flow of information”. In other words, by default, information should flow only to the extent needed to facilitate communication between the two parties.

What are some things you can do?

From the service provider’s standpoint, you should definitely verify that your terms of use, policies, and other documents are easy to find and understand. The Keep It Short and Sweet (KISS) principle is useful here: a document should be no longer than it has to be.

In addition to that:

- the language should be clear and the data processing documents should include information on data retention time, metadata, user rights as well as where and when the data is processed,

- graphics can make it easier for users to understand the content,

- a good practice is to list all the cookies and what they do,

- dating policy updates makes it easier for users to keep track of changes,

- you should also keep track of which users consented to which policy versions and inform those users about new updates,

- and an opt-out button for all cookies should be readily available and clearly visible.
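Tracking which user accepted which policy version boils down to storing one version identifier per user and comparing it with the current one. The Python sketch below assumes a hypothetical in-memory mapping of user IDs to versions; in a real system, this would be a database table with timestamps.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyVersion:
    version: str
    published: date  # a visible date helps users spot what changed and when


def users_to_notify(accepted: dict[str, str], current: PolicyVersion) -> list[str]:
    """Return IDs of users whose last accepted version is not the current one."""
    return sorted(
        user for user, version in accepted.items() if version != current.version
    )


if __name__ == "__main__":
    current = PolicyVersion("2023-03", date(2023, 3, 1))
    # user ID -> policy version that user last accepted
    accepted = {"u1": "2023-03", "u2": "2022-11", "u3": "2021-06"}
    print(users_to_notify(accepted, current))  # ['u2', 'u3']
```

The returned list is exactly the audience for an “our policy has changed” notice and, where consent is required, a new consent request.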

Do we have some methods of our own for that?

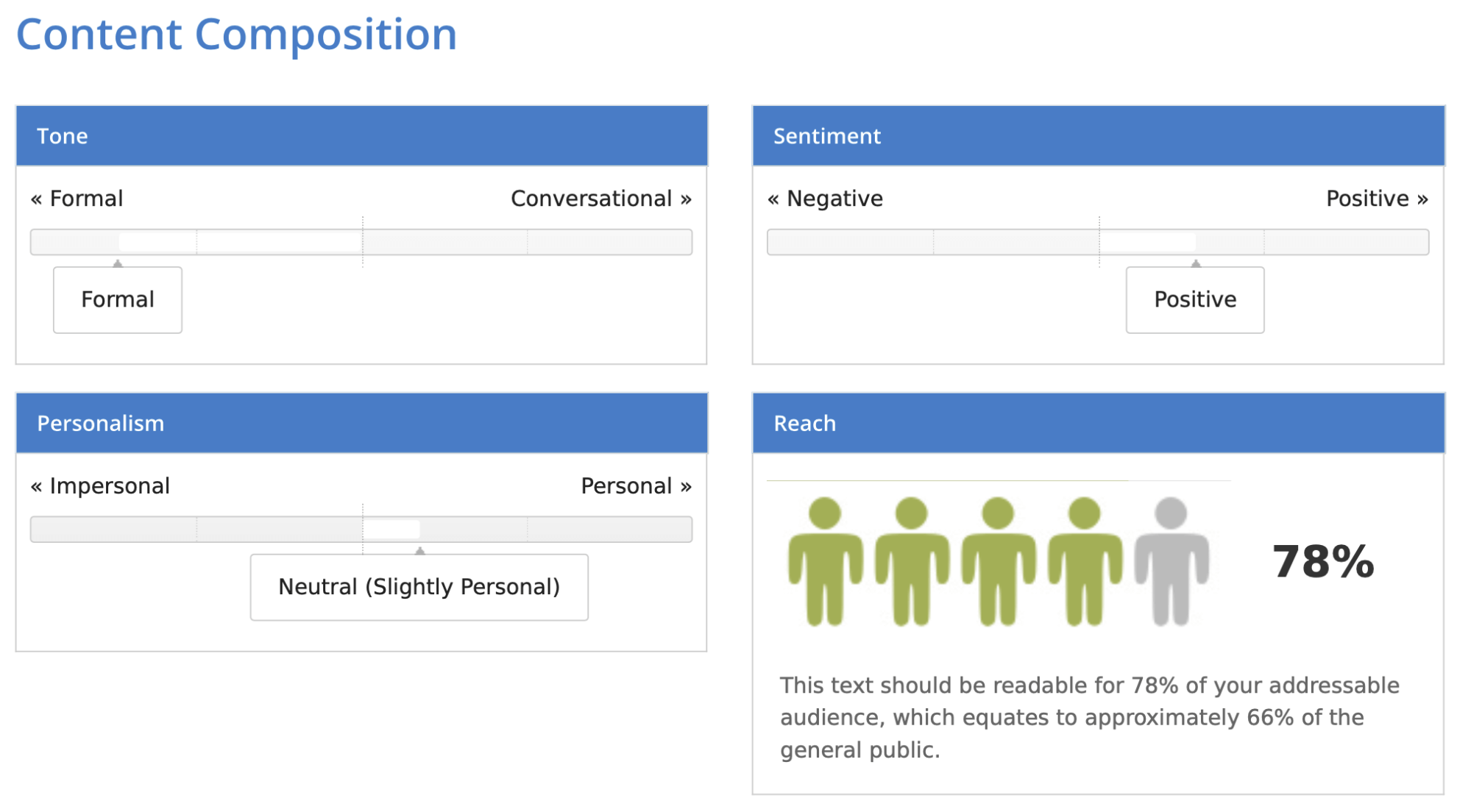

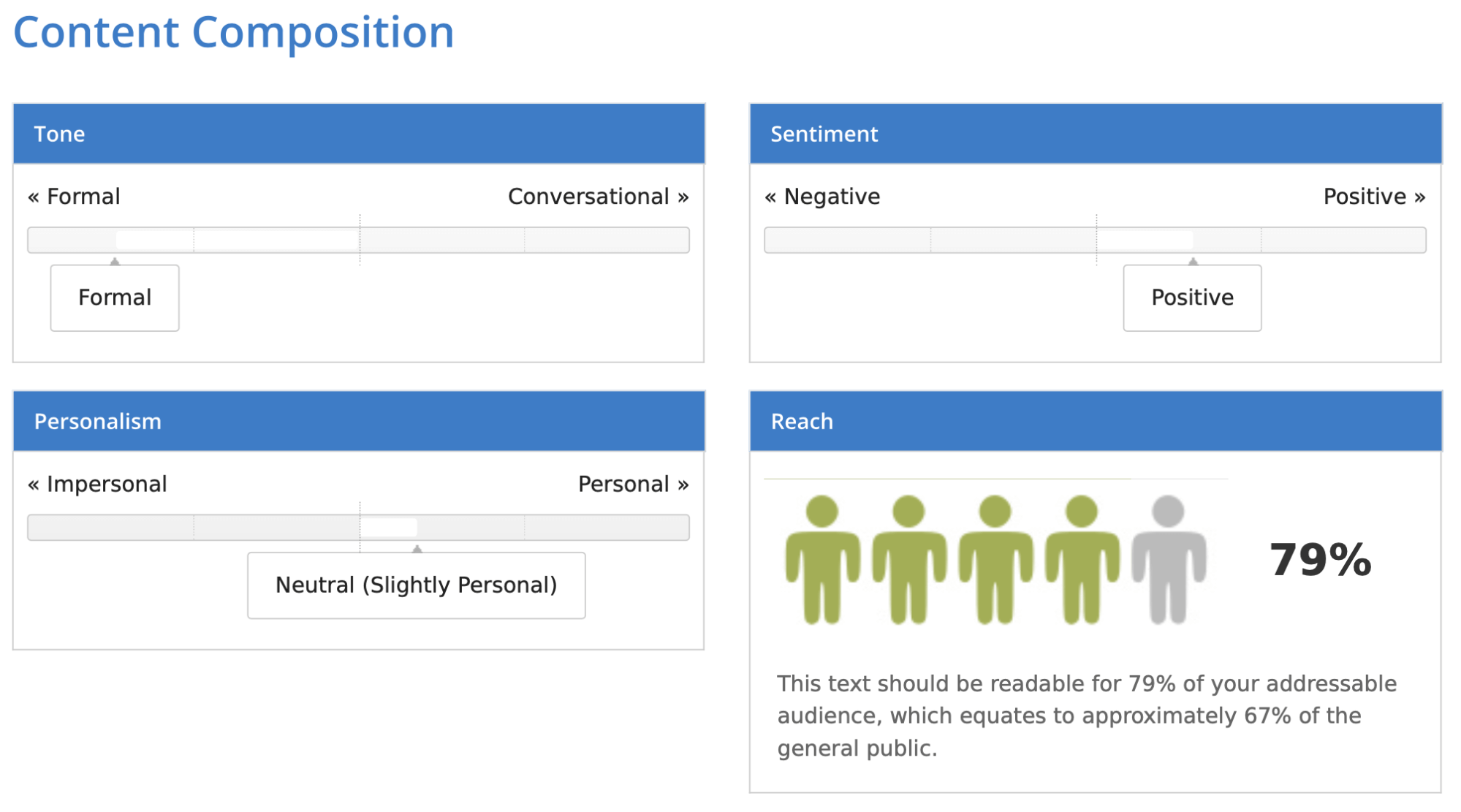

TSH response – the readability score

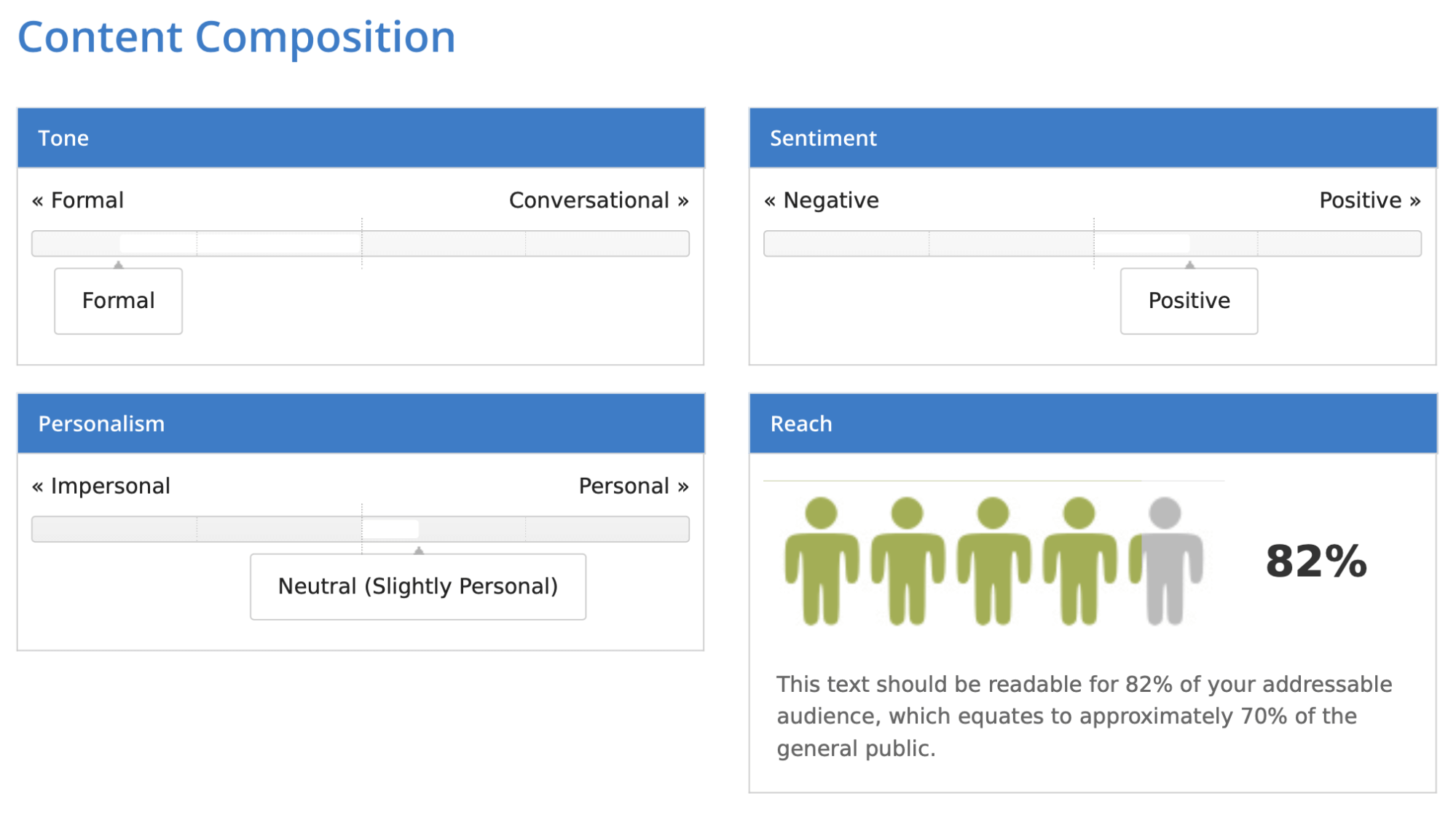

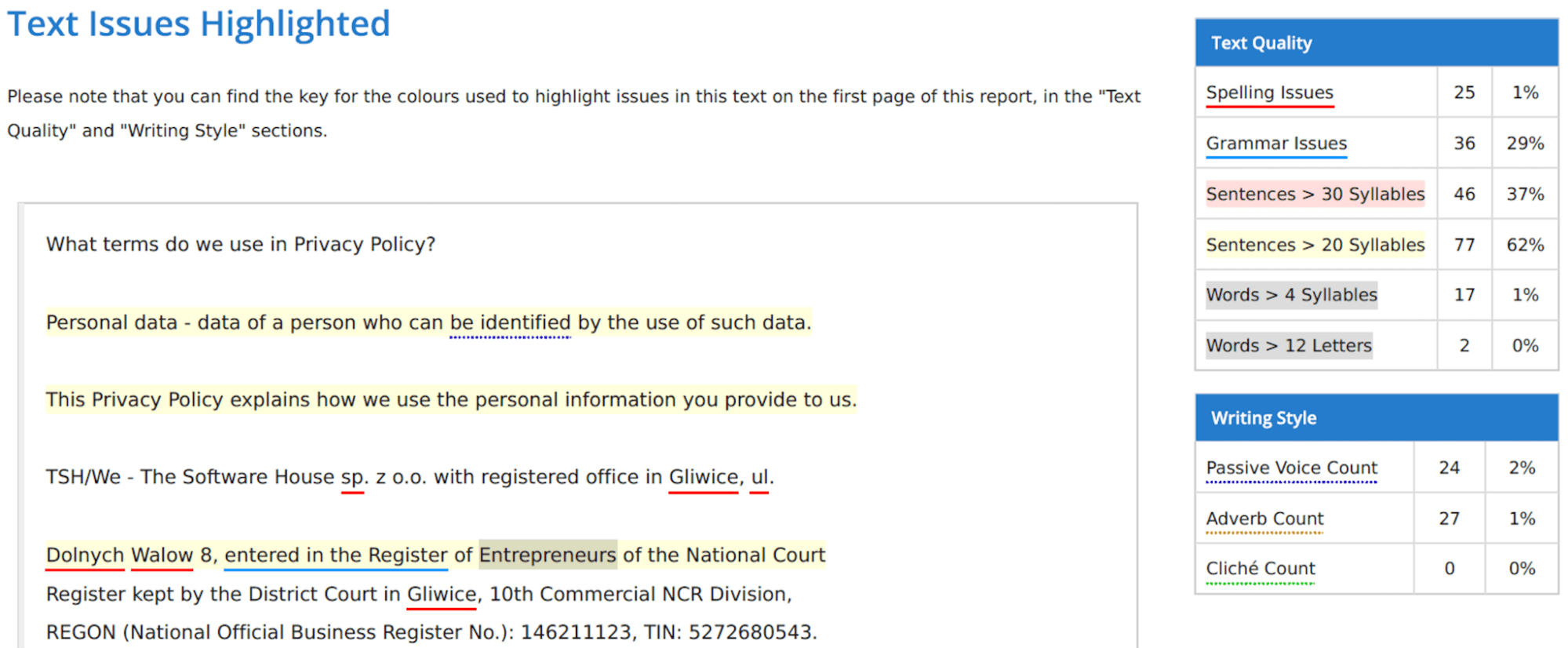

Beyond these general improvements, you can also use a readability score tester to find out whether your text is easy to follow. I ran the test on our privacy policy in three different ways:

- by pasting a URL directly,

- by exporting the text to a file,

- by copying a piece of text.

Interestingly, the results differ slightly depending on the method. Pasting the URL directly got the lowest score, 78%.

Then, I exported the whole policy and uploaded the file into the program. This time, I got 79%.

Finally, the copied and pasted version received 82%.

The tool is clearly not perfect, but it does give a general sense of how readable your text is. It also tells you about the text’s tone: the policy I uploaded turned out to be quite formal, somewhat positive, and slightly personal. Keep proper names in mind, too: ours were in Polish, so the tool, which works in English, flagged them in red as spelling errors.

Given these conditions, the 78-82% score is not too bad. It means that around 66-70% of people are able to read the text.
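The tool used above isn’t named here, and its percentage scores probably come from its own formula. For illustration only, here is one widely used metric, the Flesch Reading Ease score: 206.835 - 1.015 × (words / sentences) - 84.6 × (syllables / words), where higher scores mean easier text. The syllable counter below is a crude heuristic and an assumption of this Python sketch, so treat its results as rough, much like the differences between the three test methods.

```python
import re


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def count_syllables(word: str) -> int:
    """Rough heuristic: each run of vowels counts as one syllable (min. one)."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def score_text(text: str) -> float:
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    # Treat each run of ., ! or ? as a sentence end; count at least one sentence.
    sentences = max(1, len(re.findall(r"[.!?]+", text)))
    syllables = sum(count_syllables(word) for word in words)
    return flesch_reading_ease(len(words), sentences, syllables)


if __name__ == "__main__":
    sample = "We store your data in the EU. You can ask us to delete it at any time."
    print(round(score_text(sample), 1))
```

Short sentences and short words push the score up, which is why the formula rewards the KISS advice given earlier.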

If you want to go further, learn more about the a11y (accessibility) testing process from my colleagues at TSH!

#6 Insufficient Deletion of User Data

⏱️ Frequency: High,

🤜 Impact: High,

🔎 Type: Organizational / Technical

Once the purpose of data processing has been achieved, personal data should be removed. Unfortunately, this is not always the case: many organizations lack data retention and removal policies, which often leads to privacy breaches.

To fix this, you should:

- include this aspect in your data privacy policy, track data and document what happens to it,

- determine when data is removed once it has served its stated purpose,

- implement secure locking (extremely limited access to data) when removing such data is impossible,

- consider deleting user accounts when they are unused over a period of time or no longer needed,

- collect evidence so that you can verify that data was removed in accordance with your policy,

- consider crypto-shredding (sometimes called “degaussing for the cloud”), that is, cryptographically wiping archived and backed-up data by destroying the keys that encrypt it.
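Regular, automated retention checks make the “determine when data is removed” and “collect evidence” points concrete. The Python sketch below assumes hypothetical per-purpose retention periods and a caller-supplied `delete` function standing in for your actual storage layer; it returns an evidence log you could keep for audits. It does not implement crypto-shredding, which would additionally require per-record or per-user encryption keys.

```python
from collections.abc import Callable
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per processing purpose; real values come
# from your data retention policy and applicable law.
RETENTION = {
    "recruitment": timedelta(days=180),
    "marketing": timedelta(days=730),
}


@dataclass
class Record:
    record_id: str
    purpose: str
    collected_at: datetime


def due_for_deletion(records: list[Record], now: datetime) -> list[Record]:
    """Records kept longer than the retention period for their purpose.

    An unknown purpose raises KeyError on purpose: every purpose needs a
    defined retention period.
    """
    return [r for r in records if now - r.collected_at > RETENTION[r.purpose]]


def purge(records: list[Record], now: datetime,
          delete: Callable[[str], None]) -> list[dict[str, str]]:
    """Delete expired records and return evidence of what was removed, and when."""
    evidence = []
    for record in due_for_deletion(records, now):
        delete(record.record_id)
        evidence.append({
            "record_id": record.record_id,
            "purpose": record.purpose,
            "deleted_at": now.isoformat(),
        })
    return evidence


if __name__ == "__main__":
    now = datetime(2023, 3, 1, tzinfo=timezone.utc)
    records = [
        Record("cv-1", "recruitment", now - timedelta(days=200)),
        Record("lead-7", "marketing", now - timedelta(days=100)),
    ]
    deleted: list[str] = []
    log = purge(records, now, deleted.append)
    print(deleted)  # ['cv-1']
```

Running such a job on a schedule, and storing its evidence log, turns the retention policy from a document into something you can actually verify.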

How does it look at The Software House?

TSH response

In each of our projects, we strive to keep the client up to date about staff changes on our side. The Jira and Slack accounts of people who leave are swiftly deactivated. We strictly follow the rule that a person who has left the company should have no access to any of the client’s communication channels, environments (even testing ones!), or repositories.

Departing employees are instructed to log out of all services and apps on their company laptops and erase all content, customizations, and settings. Once this is done, they reinstall the latest version of the operating system.

On the day the employee hands over the laptop, their access settings and email accounts are deleted. If they return to our company later, we create new accounts and grant all the necessary access from scratch. That way, we ensure the safety of both our own and the client’s data.
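A process like this is easy to double-check automatically. The Python sketch below compares the list of people who have left with the active users of each service and reports any accounts that were missed. It assumes you can export active users per service (how you do that depends on each tool’s admin API, which is not shown), and the service names and data are hypothetical.

```python
def lingering_accounts(departed: set[str],
                       active_users: dict[str, set[str]]) -> dict[str, list[str]]:
    """Map each service to the departed people who still have an active account there."""
    report = {}
    for service, users in active_users.items():
        leftovers = users & departed
        if leftovers:
            report[service] = sorted(leftovers)
    return report


if __name__ == "__main__":
    departed = {"alice"}
    active_users = {
        "jira": {"alice", "bob"},
        "slack": {"bob"},
        "github": {"alice"},
    }
    print(lingering_accounts(departed, active_users))
    # prints {'jira': ['alice'], 'github': ['alice']}
```

An empty report is the goal; anything else points to an account that the manual checklist missed.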

Want to learn more about how we acquire and retain developers? Check out this acquisition and retention article from our CEO!

#7 Insufficient Data Quality

⏱️ Frequency: Medium,

🤜 Impact: Very high,

🔎 Type: Organizational / Technical

Sometimes, an organization uses outdated, incorrect, or flat-out false user data. How is that possible?

Imprecise forms, technical errors (during logging or saving), or flawed data aggregation (e.g. related to cookies or social network account integration) may be to blame. Unfortunately, the option to edit or remove data may be very limited in some applications. I experienced this myself after logging in to Shopee with my Facebook account: when I wanted to change my payment card details, the app asked for a password, which I didn’t have because I had registered with a third-party account.

Testing this particular vulnerability is quite easy. You need to ask the data operator if:

- the personal data they have is up-to-date,

- they control the data’s accuracy by asking the user about it on a regular basis,

- you can edit the data at your convenience.

In short, you need to implement data validation processes (including checks for common copy & paste errors) to prevent collecting and generating untrusted data, keep the data up to date (including copies held by third parties!), and verify the data with the user when they perform critical actions.
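Validation of this kind can start small. The Python sketch below cleans up typical copy & paste debris (invisible zero-width characters, non-breaking spaces, stray whitespace) before checking an email address against a deliberately simple pattern. The character list, the pattern, and the helper names are assumptions of this sketch, not a complete validator; confirming the address with the user (e.g. via a verification email) is still needed.

```python
import re
import unicodedata

# Invisible characters that often sneak in via copy & paste.
INVISIBLE_CHARS = {"\u200b", "\u200c", "\u200d", "\ufeff"}

# Deliberately simple pattern: one "@", no whitespace, a dot in the domain.
# Full RFC 5322 validation is out of scope for this sketch.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def clean_input(value: str) -> str:
    """Normalize Unicode, drop invisible characters, and collapse whitespace."""
    value = unicodedata.normalize("NFKC", value)  # e.g. non-breaking space -> space
    value = "".join(ch for ch in value if ch not in INVISIBLE_CHARS)
    return " ".join(value.split())


def validate_email(raw: str) -> str:
    """Return a cleaned email address or raise ValueError."""
    email = clean_input(raw)
    if not EMAIL_PATTERN.fullmatch(email):
        raise ValueError(f"invalid email address: {raw!r}")
    local, _, domain = email.rpartition("@")
    # Domains are case-insensitive; the local part is left untouched.
    return f"{local}@{domain.lower()}"


if __name__ == "__main__":
    print(validate_email("  Jane.Doe@Example.COM\u200b "))  # Jane.Doe@example.com
```

Cleaning before validating matters: a pasted address with a trailing zero-width space looks correct on screen but would otherwise be stored as a different, undeliverable value.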

TSH response

We avoid this vulnerability by storing up-to-date employee data in secure apps, where each employee can change it at any time.

From a legal perspective, a major change such as a new name or place of residence only requires sending one statement to the HR department. There is no need to update the employment contract or sign an annex to it. The change is simply reflected in the next contract we sign, and employees can now sign it with a safe and convenient one-click e-signature from providers such as Autenti.

In addition to that, we also ask each employee to verify their place of residence each time we send them documents or equipment.

#8 Missing or Insufficient Session Expiration

⏱️ Frequency: Medium,

🤜 Impact: Very high,

🔎 Type: Technical

If you don’t take sufficient session expiration measures, your users may end up giving you more than you need without even knowing. Typical reasons for this vulnerability include not setting a session duration limit or hiding the logout button.

If the session is still active and someone else gets access to the device, they might even steal or manipulate the original user’s data.

The easiest way to prevent this is to implement an automatic session expiration mechanism. Its timeout may vary depending on the type of application and the sensitivity of the data. You might also set a maximum session duration (no longer than a week, and as short as 10 minutes for many banking apps). You might even consider letting users choose these values themselves.
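Both kinds of limits can be enforced with two timestamps per session: an idle timeout (time since the last activity) and an absolute timeout (time since login). In the Python sketch below, the 10-minute and one-week defaults borrow the range mentioned above, but assigning them to the idle and absolute limits respectively is an assumption of this sketch. The session would be created at login and checked on every request.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Session:
    created_at: datetime
    last_activity: datetime
    # Assumed defaults taken from the range above; tune them per application.
    idle_timeout: timedelta = timedelta(minutes=10)
    absolute_timeout: timedelta = timedelta(days=7)

    def is_expired(self, now: datetime) -> bool:
        """Expired after too long without activity OR too long overall."""
        idle_too_long = now - self.last_activity > self.idle_timeout
        too_old = now - self.created_at > self.absolute_timeout
        return idle_too_long or too_old

    def touch(self, now: datetime) -> None:
        """Record user activity; refuse to extend a session that already expired."""
        if self.is_expired(now):
            raise PermissionError("session expired, please log in again")
        self.last_activity = now


if __name__ == "__main__":
    start = datetime(2023, 3, 1, 12, 0)
    session = Session(created_at=start, last_activity=start)
    session.touch(start + timedelta(minutes=5))
    print(session.is_expired(start + timedelta(minutes=14)))  # False: idle 9 minutes
    print(session.is_expired(start + timedelta(minutes=16)))  # True: idle 11 minutes
```

The absolute timeout matters even for active users: without it, a hijacked session could be kept alive indefinitely simply by making requests.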

TSH response

All of the best practices mentioned above are standard in our projects. The Software House’s QA team always tests these simple session-related measures, and the security team double-checks them during its own audits.

#9 Inability of Users to Access and Modify Data

⏱️ Frequency: High,

🤜 Impact: High,

🔎 Type: Organizational / Technical

When users can’t access or modify their own data (including data stored by third-party providers), you’ve got yet another vulnerability – broken access control.

You can make the data available for modification primarily through:

- a user account,

- a special form,

- or an email.

It’s vital that you make sure that data modification requests are resolved quickly.

TSH response

On our end, we have already covered this topic above, in the section on insufficient data quality. Other than that, we follow the same best practices mentioned in this section.

#10 Collection of Data Not Required for the User-Consented Purpose

⏱️ Frequency: High,

🤜 Impact: High,

🔎 Type: Organizational

Each piece of data you ask for may pose some sort of privacy risk. Because of that, it’s highly recommended that you don’t collect any data that you don’t absolutely require. If data is divided into primary and secondary in terms of importance, you need to make it clear to the end user. You also need to update the information if you change the priority classification of a given piece of data.

A good example of this vulnerability is an e-commerce platform that shows personalized ads by default, with no regard for user consent. Instead, users should first opt in to receive such content. Similarly, when a website collects email addresses to confirm orders, it should explicitly ask for permission before also using those addresses in its newsletter campaigns.
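One way to enforce this in code is to map each declared purpose to the fields it actually needs and drop everything else before storage. The Python sketch below assumes hypothetical purposes and field names; the newsletter purpose would only be passed in after the user explicitly opts in.

```python
# Hypothetical mapping of processing purposes to the fields each one needs.
REQUIRED_FIELDS = {
    "order_confirmation": {"email", "name", "shipping_address"},
    "newsletter": {"email"},
}


def minimize(submitted: dict[str, str], purposes: set[str]) -> dict[str, str]:
    """Keep only the fields needed for purposes the user has agreed to."""
    allowed: set[str] = set().union(*(REQUIRED_FIELDS[p] for p in purposes))
    return {name: value for name, value in submitted.items() if name in allowed}


if __name__ == "__main__":
    form = {
        "email": "jane@example.com",
        "name": "Jane",
        "shipping_address": "1 Main St",
        "birthday": "1990-01-01",  # not needed for any consented purpose
    }
    print(sorted(minimize(form, {"order_confirmation"})))
    # prints ['email', 'name', 'shipping_address']
```

Filtering at the point of collection means unneeded data never reaches the database, so it never has to be secured, retained, or deleted later.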

TSH response

At The Software House, we always create clear definitions for each of the data processing goals, well before any actual data collection starts. We recommend taking as little user data by default as possible. If the client wants to collect more, it should ask the user for permission explicitly.

Conclusions – prepare yourself for OWASP Top 10 Privacy Risks

The latest vulnerability list from OWASP shows just how many privacy threats await both app creators and users.

In order to protect your business, not only do you need proper measures at the development level, but also at the organizational level.

We covered the former in detail above. As for the latter, The Software House continues to improve in various ways, especially by:

- complying with GDPR and implementing industry standards such as ISO,

- writing good privacy policies,

- organizing and developing a dedicated security team,

- conducting security audits and vulnerability checks to find problems and raise general awareness of these issues,

- educating our employees through onboarding, presentations, or a newsletter,

- keeping the QA team up to date with all the latest security trends (regarding both web apps and APIs),

- developing a best practices checklist (based on ASVS, OWASP’s very own Application Security Verification Standard) designed so that each employee can check app security on their own.

These measures not only minimize OWASP privacy risks but also improve the overall quality of our software products.

We recommend you do the same.

And when you search for a software provider to help develop your next application...

… pick one that has already made the effort. After all, the security of your clients is at stake.