09 July 2019

What can web developers learn from the expensive mistakes of the space industry?

All web developers make mistakes. Sometimes it’s a badly chosen tool, sometimes it’s a bug deployed to production. Smart people say the key is to always learn from your mistakes to never repeat them again. Yes, but we can do an even better job than that! We don’t need to learn only from OUR mistakes, we can look for wisdom and experience from other developers too. Or people from other industries, let’s say… THE SPACE INDUSTRY. Even the sky shouldn’t be a limit, right?

So, in this article, we will look at some mistakes that were made and lessons we can learn from them. If you think that building rockets seems to be totally different from web development, it’s OK. However, it’s also possible to find common ground and find things that will be useful in your day-to-day work. And believe me, I’d know since it’s hitting close to home – after programming, the space industry is my second pet topic. My Quality Assurance colleague has already proven there’s a thin line between appropriate testing and spaceships lost in space. Now it’s web developers’ turn!

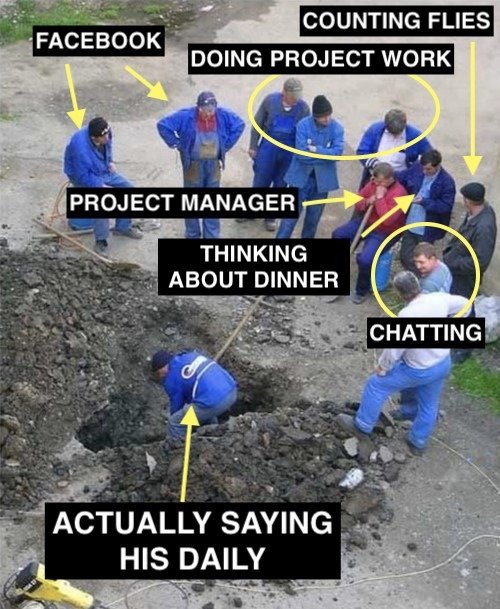

Keep the whole team up-to-date

One rule of the Scrum methodology is to have daily meetings to determine what we’ve already done, what difficulties we encountered during our work, and what we plan for the future. A lot of software developers disregard daily meetings because they think that they don’t need to know what other people in their project actually do. Sometimes it comes from being too focused on your own work, sometimes from simply trying to rattle through the whole meeting as fast as possible.

Regardless of the actual reason, daily meetings bring developers one value that matters above all others: they let them know what is currently happening in other parts of the application, which may or may not have an impact on their work.

Unfortunately, developers don’t always appreciate how important that is. Blocked communication is the first step to dooming the whole project. It won’t matter how good your work is if someone changes the system and breaks the whole thing. You don’t believe me? Well then, prepare yourself for one heck of a story about…

The engine failure during the Apollo 5 mission

The Apollo 5 mission was one of the most important test missions before sending a human to the Moon. It was the first time the lunar module was sent into space to test whether all of its modules worked as expected. One of those modules was the DPS (Descent Propulsion System), responsible for a safe landing on the silver globe using a liquid-propellant engine. The DPS had a computer that monitored the engine status; for example, it was programmed to check whether the engine ignited correctly within a given time. If not, it would turn the engine off to prevent any more problems from occurring. Now, the whole system had to be tested in space.

The mission’s launch went well, and the lunar module reached the correct low Earth orbit. After doing the initial tests, the mission control team reached phase 9: firing the main engine for the first time for exactly 38 seconds. Yes, you’ve guessed it – it didn’t go well. If you had been in the mission control room that day, you would have heard an announcer’s voice saying “Engine on” and, just a second later, “Engine off”, a lot sooner than expected.

What happened? As it turned out, it was the DPS computer that performed an emergency shutdown of the engine just a moment after ignition started. Within the time the computer allowed, the engine thrust hadn’t risen enough to satisfy the constraints of its algorithm. In the official documents, you will find a note that all this mess happened because the pressure in the lunar module tanks was too low. Low tank pressure means slower propellant flow, and that leads to a slower rise in engine power. The computer software didn’t take that into account, which finally resulted in an engine-off command. However, Don Eyles, an Apollo mission software engineer, thinks the problem lies elsewhere – in his paper, he states that it was NOT a software error.

As long as there’s communication, everything can be solved

So what did Eyles notice? A few seconds before the DPS engine starts, it is armed. This means that a valve between the fuel tank and the propellant manifold is opened so that the fuel can flow through the manifold and reach a second valve, which now separates the fuel from the engine’s combustion chamber (the place where the fuel is burned with an oxidizer). Unfortunately, before the mission started, the second control valve was suspected of being leaky. If that was true, a small amount of fuel could have entered the combustion chamber after the engine was armed but before ignition. As a result, during engine start there would be more fuel in the combustion chamber than expected, and that would end in a destructive explosion.

To work around this problem without disassembling the whole module, the engineers decided to skip the process of arming the engine and to open both valves at the same time, at the exact moment of the engine start command.

It wasn’t a bad idea. The problem was that they forgot to tell the software engineers who worked on the DPS computer about it.

From then on, at the moment of ignition, the propellant had to travel through the whole manifold to reach the combustion chamber. That took time and delayed the engine’s proper start. The computer didn’t know about this time offset and, as usual, expected a certain level of thrust after the given time. When that didn’t happen, the computer suspected the engine of being faulty and automatically turned it off.
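To make the mechanism a bit more tangible, here is a minimal sketch of such a start-up check in TypeScript. Everything in it is an assumption made for illustration: the names, the linear thrust ramp, and all the numbers are invented and have nothing to do with the real DPS software. It only shows how a deadline that was correct for one procedure quietly becomes wrong when the procedure changes.

```typescript
// Hypothetical start-up check: thrust must reach a threshold by a fixed
// deadline, otherwise the monitor shuts the engine down.
interface StartupCheck {
  deadlineMs: number;        // when the monitor judges the engine
  minThrustPercent: number;  // thrust expected at that moment
}

// Simplified (invented) model: thrust rises linearly, but only after the
// propellant has filled the manifold (fillDelayMs).
function thrustAt(timeMs: number, fillDelayMs: number, rampMs: number): number {
  if (timeMs <= fillDelayMs) return 0;
  return Math.min(100, ((timeMs - fillDelayMs) / rampMs) * 100);
}

function monitorDecision(
  check: StartupCheck,
  fillDelayMs: number,
  rampMs: number
): "continue" | "shutdown" {
  const thrust = thrustAt(check.deadlineMs, fillDelayMs, rampMs);
  return thrust >= check.minThrustPercent ? "continue" : "shutdown";
}

// The check was tuned for the original procedure (made-up values).
const check: StartupCheck = { deadlineMs: 1000, minThrustPercent: 50 };

// Engine armed beforehand: the manifold is already full, so no fill delay.
const armedStart = monitorDecision(check, 0, 1500);

// Both valves opened at ignition: the propellant arrives later.
const unarmedStart = monitorDecision(check, 600, 1500);

console.log(armedStart, unarmedStart); // "continue" "shutdown"
```

Nothing in the monitoring code is wrong by itself. It fails only because the assumption baked into `deadlineMs` was changed by another team and nobody passed the message on.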

Later on, the second test attempt was successful because the computer was turned off for it and the ground control team conducted the process by hand. As a result, the software engineers were blamed for the problem. Don Eyles says everything could have been easily fixed if only the programmers had known about the change in the DPS fuel system.

So, was it a problem with communication? Probably yes. We can’t expect teams as massive as those building a moon lander to have daily meetings, but nonetheless this example shows us how crucial it is to communicate all changes to all the people involved.

On the micro-scale of software development, daily meetings help us to share information about last-minute changes. It’s important not only to listen but also to honestly inform others about your work and ask about theirs. It helps to raise concerns before everything goes into space… I mean – onto the production server. Finally, everyone should know the overall state of every other component so that the team can align its work. Programmers can’t shut themselves in their own “task bubble”; they need to be aware of things that change around them because, you know, everything’s connected.

Never assume that things will work

There’s a saying that a lazy programmer is a good programmer because they will always find the quickest way to the solution.

Jokes aside, let’s talk about one of the aspects brought to you by the lazy approach. Developers often assume that if something worked in one situation, it will also work in another. I wouldn’t say it’s irresponsibility but rather not taking all factors into account. Changing environment, different dataset, a new collection of modules surrounding the one you are reusing – every change matters and can make your battle-tested solution faulty.

It doesn’t mean you should test a module line by line every single time (there are unit tests for that), but rather that you should think about what will change in the module’s surroundings that can influence its algorithms. We don’t spend enough time on it, and we tend to forget that some changes can have massive consequences in places we would never think of. Just like the Ariane 5 maiden flight…

Can you hear us, Ariane 5?

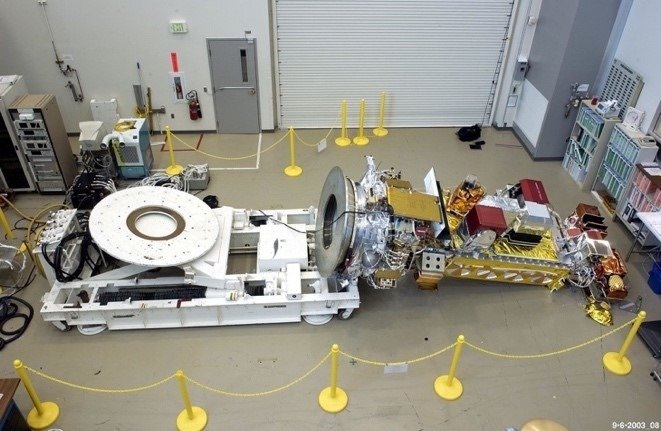

It was the year 1996 when the European Space Agency wanted to extend its fleet with a brand-new design and carry out the first launch of its new Ariane 5 heavy rocket. The plan for the maiden flight was to take into orbit a constellation of four ESA satellites named Cluster. They would have studied the Earth’s magnetosphere, if only they had had a chance to start at all. Unfortunately, 37 seconds after the rocket’s launch everything went south because of one “it will work for sure” too many.

The Ariane 5 had two copies of an Inertial Reference System (RSI). Each copy of the system had a computer connected to its own laser gyros and accelerometers. Such redundancy is common in rocket architecture in case of a hardware failure. Both RSIs work at the same time, but the data is taken from only one of the copies. If the active device fails, the second one is hot-swapped in and continues the calculations. The problem is that although the rocket has a redundant copy of the RSI, both devices have the same software installed.

Now let’s think for a moment. If both devices work simultaneously and both of them get the same data from external sensors, what will happen to them in the case of a software bug? A bug handled so badly that it will make both systems crash at the same moment. Well…

Inertial Reference System and AligmentFunction

To understand what happened, we need to learn a little bit about the Inertial Reference System. Ariane 5 had the same RSI as its predecessor, the Ariane 4 rocket. The RSI worked there, so it was moved to the Ariane 5 project as a black box together with its code. As a result, features that were written in code for Ariane 4 were also present in the Ariane 5 project, including a special function for calculating the rocket’s body alignment until lift-off happens. For the sake of readability let’s name it AligmentFunction. Normally AligmentFunction would be turned off about nine seconds before lift-off because it isn’t useful any more. However, as long as this function keeps running, it provides good measurements. But if you turn it off, it becomes uncalibrated. Now if you want to use the AligmentFunction output again, you’ll have to wait 45 minutes or more for a full recalibration!

This can be a problem in the case of a late countdown hold, somewhere between nine and zero seconds before lift-off (which happens fairly often for various reasons). Most space missions have a limited time window in which they can launch, so having the rocket quickly prepared for the next attempt can be crucial for mission success that day. A solution was needed for this calibration problem, and it was decided to keep the AligmentFunction running for 50 seconds longer. If anything happened and the launch was aborted during the final countdown, the mission control team would have enough time to safely restart the RSI without forcing it to be recalibrated. If the launch went smoothly, the AligmentFunction would automatically turn off during the first minute of flight.

The “extra 50 seconds” feature was also present in Ariane 5, but it was totally unnecessary – changes implemented in preflight operations (in comparison with Ariane 4) made this feature redundant. What’s more, the RSI code was never tested with real Ariane 5 data, nor were the system requirements updated for the Ariane 5 flight profile specification. The key difference between the Ariane 4 and Ariane 5 flight profiles was the higher horizontal velocity reached by the latter. The AligmentFunction received those numbers as parameters and, as a result, it ended with a numeric overflow in the 35th second of flight. At that specific moment, AligmentFunction tried to convert a large 64-bit floating-point number to a 16-bit signed integer variable, which ended in a catastrophic Operand Error.
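Web developers can reproduce the same class of bug at home. The sketch below is only an illustration in TypeScript; the function names are hypothetical and this is in no way the flight code. One difference is worth stressing: where the rocket’s software stopped with an Operand Error, a JavaScript typed array does not complain at all and silently wraps the value around, which is arguably even harder to notice.

```typescript
// Unchecked conversion: an Int16Array silently wraps values that do not fit
// into 16 bits (modulo 2^16), so the result is plausible-looking garbage.
function toInt16Unchecked(value: number): number {
  return new Int16Array([value])[0] as number;
}

// Checked conversion: refuse out-of-range input instead of corrupting it.
function toInt16Checked(value: number): number {
  if (!Number.isFinite(value) || value < -32768 || value > 32767) {
    throw new RangeError(`${value} does not fit into a signed 16-bit integer`);
  }
  return Math.trunc(value);
}

console.log(toInt16Unchecked(1234.9)); // 1234 (in range, fraction dropped)
console.log(toInt16Unchecked(40000));  // -25536 (out of range, wrapped)

try {
  toInt16Checked(40000);
} catch (error) {
  // The caller now has to decide what a sensible fallback is.
  console.log((error as Error).message);
}
```

The checked version alone would not have saved the flight, because an unhandled exception is exactly what took the RSI down. The point is rather that the valid input range is an assumption, and it has to be revisited whenever the code moves to a new environment.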

From this moment everything went fast. First, the whole active RSI went into a failure state. The On-Board Computer (OBC) tried to switch to the backup RSI, but it was already in a failure state too. Why? For the exact same reason: its alignment function had also crashed with an Operand Error. Of course, the OBC had to have some telemetry data during flight, so it blindly took anything that was available from the RSI. Unfortunately, in the failure state the RSI was providing the OBC with nothing more than an error code, which was designed solely for debugging purposes.

Surprisingly, the OBC interpreted the code as normal valid data and, less surprisingly, concluded that the rocket was in the wrong position.

The on-board computer tried to fix that (as it thought) incorrect flight vector and commanded a sudden increase of the flight angle. When air resistance hit the tilted Ariane 5 body, it broke into two pieces. Finally, in the 39th second of flight, the self-destruct system detected unrecoverable problems, so it blew the rocket up. The first Ariane 5 rocket of the series ended up exploding in the air and covering the sky with burning debris that fell on the territory of French Guiana.
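In our world the same thing happens whenever an error payload travels through the same channel as real data. One possible way to guard against it in TypeScript is a discriminated union, so that the consumer is forced to check what it actually received. The types, field names and numbers below are hypothetical and only sketch the idea.

```typescript
// Hypothetical reading from a navigation unit: either valid data or a
// failure report. The "kind" field tells the two apart.
type AttitudeReading =
  | { kind: "ok"; pitchDegrees: number }
  | { kind: "failure"; diagnosticCode: number };

// Returns the correction to apply, or null when there is no trustworthy data.
function pitchCorrection(
  reading: AttitudeReading,
  targetPitch: number
): number | null {
  switch (reading.kind) {
    case "ok":
      return targetPitch - reading.pitchDegrees;
    case "failure":
      // The diagnostic code is meant for logs and humans, never for steering.
      return null;
  }
}

console.log(pitchCorrection({ kind: "ok", pitchDegrees: 80 }, 85));          // 5
console.log(pitchCorrection({ kind: "failure", diagnosticCode: 9001 }, 85)); // null
```

With this shape the compiler will not let you read `pitchDegrees` from a failure, so “treat the error code as a measurement” stops being an easy mistake to make.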

This shows how one assumption drove the whole mission to an unprecedented failure.

Making assumptions is convenient but also dangerous

Do we really know the whole module from top to bottom well enough to assume it will work exactly the same in the new project as in the old one? Do we know what hidden operations run under the hood? Also, will the module’s input and environment be the same in both projects? If the answer is no, what will be the impact? There are a lot of questions here, but you should ask them every time you move a module between projects. Mostly we skip them because often nothing goes wrong. But are you brave enough to eventually face the results of your wrong assumption after the application goes live?

This is not the only thing we can learn from Flight 501. As you probably remember, the AligmentFunction kept running longer only to increase the chances of launching the rocket within the launch window in case of a countdown hold. It was a piece of code that ran only for the convenience of the mission control team and, ultimately, to please the client by launching on the first possible date.

In our small web developer’s world, we often do similar things. We add small parts of code just in case. They often duplicate some work or make sure something happened – like removing a directory at the end of the script, even if it shouldn’t exist at that moment because one of the previous commands should have already deleted it.

In that case, you should ask yourself: is it ALWAYS safe to do it? Maybe you should analyze the script flow better to make sure the folder won’t exist? Maybe you should reorganize? Every additional line of code means one more place to fail. No code should be left just to be there; everything should have a purpose. Even if it doesn’t cause problems, it can still confuse the developers who will maintain the code in the future. And as the popular developers’ saying goes, we should code as if the future maintainer of our code were a serial killer.

Always update the documentation

There’s also a second famous saying – bad documentation is better than no documentation at all. However, from my experience, this isn’t always true. Outdated documentation can sometimes bring unimaginable frustration. Let me explain – when you have the list of instructions on how to run a new project locally, you can follow it step by step. If something doesn’t work as expected, you will be in the middle of a process and wonder what is to blame. Maybe you did something wrong or maybe there’s something incorrect with your environment? Maybe the process changed, and the documentation had errors?

When conducting an investigation to find where the problem lies, you’ll probably rely on documentation the most, because it should be correct, right? Developers naturally assume that if something was written down then it should be valid. Because hey – someone put effort and precious time into creating that document after all.

However, if during your investigation you find out that documentation is faulty, you will feel lied to and cheated. Depending on the size of your problems with documentation, you may even start asking why you started using docs in the first place. Maybe you would be better off checking available commands in the Makefile? Eventually, maybe you should just do a standard operation like creating the env file and executing `npm run start`? Outdated documentation can bring you a lot of pain so that’s why it’s important to care about it whenever it’s required. There is another story from the space industry connected with it.

Hey satellite, did you enjoy your trip?

The American National Oceanic and Atmospheric Administration puts a lot of satellites in space. One of them was NOAA-19, specialized in weather forecasting. It was built by the Lockheed Martin company and was meant to work in polar orbit for 2 years. Everything went smoothly until September 2003, when something “impossible” happened. The satellite, worth 239 million dollars, simply tipped over from its turn-over cart in the factory!

The report stated that 30% of the satellite parts were damaged. But how could this happen? A NASA investigation revealed a lot of problems in the work culture of that particular facility. Normally, when you work in the space industry, you get used to a very specific work methodology. Every tool taken from the toolbox has to go back to the exact same spot before the end of the day, in order to avoid the risk of leaving it inside the satellite or rocket. Every important operation is closely watched by your colleagues, so you never do anything carelessly. Sometimes they even say out loud what’s happening so they don’t lose focus. And finally, every completed task is written down so others can check what was happening. The last rule is the most important here, because failing to respect it was the direct reason why NOAA-19 hit the floor.

In the post-accident report, NASA confirmed that when the satellite was in storage, one of the workers removed 24 bolts that were keeping the device fixed to an adapter plate – a part of a turn-over cart. Carts of this kind make it easy to move the assembled satellite into different positions, mainly between horizontal and vertical. The bolts were removed so that the satellite could be taken off the cart later on. The problem, however, was that the worker didn’t document this fact, and when other employees came to do their job, they looked into the documentation and didn’t see anything suspicious. They also forgot to check if the bolts were in place, which was another violation of standard safety procedures. So when they moved the cart, the satellite crashed to the floor, causing 30 million dollars’ worth of damage. It was a huge price to pay for forgetfulness. The satellite, known before launch as NOAA-N’ (NOAA-N Prime), was later repaired and successfully launched into space.

This case shows that there should be no tolerance for NOT updating the project documentation. Of course, in web development the stakes are never that high, but that doesn’t mean we can afford to be sloppy instead of learning a lesson from it.

Documentation should be treated as a contract.

If your team decides to write something down once, then everybody is obligated to keep the information up-to-date – no exceptions. It’s not so important whether it is a README.md or a description in Storybook. Programming is a specific type of teamwork where we cooperate not only with people from the current team but also with anyone who will join in the future (even after you’re gone). So, remember – respect your documentation. The success of newcomers and future maintainers depends on it.
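A contract is easier to keep when something checks it for you. As a small, hedged illustration, the TypeScript sketch below looks for `npm run <name>` commands mentioned in a README text and reports those that no longer exist among the project’s scripts. The function names and the sample README are made up, and a real project would read both inputs from disk, for example as a step in CI.

```typescript
// Collects every script name mentioned as `npm run <name>` in the docs.
function documentedScripts(readme: string): string[] {
  const pattern = /npm run ([\w:-]+)/g;
  const names: string[] = [];
  let match: RegExpExecArray | null;
  while ((match = pattern.exec(readme)) !== null) {
    const name = match[1];
    if (name !== undefined && names.indexOf(name) === -1) {
      names.push(name);
    }
  }
  return names;
}

// Returns the documented scripts that are missing from package.json.
function staleScripts(
  readme: string,
  packageScripts: Record<string, string>
): string[] {
  return documentedScripts(readme).filter(
    (name) => !Object.prototype.hasOwnProperty.call(packageScripts, name)
  );
}

// Invented sample data standing in for README.md and package.json.
const readme = "Run `npm run start`, then seed the data with `npm run db:seed`.";
const scripts = { start: "node server.js", test: "jest" };

console.log(staleScripts(readme, scripts)); // ["db:seed"]
```

It will not tell you whether the prose around the commands is still true, but it catches the most embarrassing case: instructions that cannot even be executed.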

Over and out – is the space industry really relevant to web developers?

Today we’ve learned three interesting stories from the space industry. And it’s pretty clear that when we compare them to a web developer’s day-to-day routine, we can find some things in common.

Some may say that those examples were far-fetched and that such dramatic things never happen in developers’ work. True, but that’s not the point.

I only tried to show that pretty innocent mistakes can lead to massive problems. The same happens in a web developer’s life – maybe on a smaller scale than blowing up a fully fuelled rocket, but I am pretty sure that crashing a popular website will upset millions of users and damage the business behind it!

If you liked the article or have some comments, find me on Twitter (@marcingajda91). Maybe someday I will write another article with more stories from the space industry? Until then, thanks for reading!