07 May 2020

Post-webinar Q&A: Serverless examples of AWS Lambda use cases

Recently, we held an online webinar with special guests: Bogusław Buszydlik, Michał Górski, Mateusz Boś and Wojciech Dąbrowski – AWS Lambda and serverless stack specialists from the Rozchmurzeni blog. It focused on practical serverless examples from real-life projects and AWS Lambda use cases.

Since the whole event was conducted in Polish, we decided to summarise the conclusions and questions from the webinar’s Q&A panel and present them in this article.

Why AWS and not Google Cloud Platform?

At the very beginning, we used the Azure cloud and we switched to AWS in 2016. The main reason was the technological advantage of AWS at that time.

At the moment, AWS is popular on the market not only because it’s backed by the even more popular Amazon, but also because it’s flexible and adjustable.

Google Cloud is trying to catch up and most AWS services already have their counterparts there (sometimes they are even cheaper).

However, AWS has everything we currently need, and its pace of development and popularity allow us to assume that there will be no need to change the supplier in the upcoming years.

When do we talk about asynchronous and synchronous Lambda calls?

Lambda can be called in two modes – request-response (invocation type RequestResponse) and event (invocation type Event). The first is a synchronous call and the second is asynchronous. In the first case, you wait for a response from Lambda, and in the second you only send a request without waiting for the answer.

Each Lambda can be called manually in both modes, but it is worth knowing that when AWS services call our Lambda, the mode depends on the service: S3 and SNS, for example, call it asynchronously, while API Gateway calls it synchronously.
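The two modes can be illustrated with a minimal sketch. It assumes `client` is a boto3 Lambda client (for example `boto3.client("lambda")`); the helper name `invoke` and its `wait_for_result` flag are our own and not part of any AWS API.

```python
import json


def invoke(client, function_name, payload, wait_for_result=True):
    """Call a Lambda function synchronously or asynchronously.

    `client` is assumed to be a boto3 Lambda client.
    """
    response = client.invoke(
        FunctionName=function_name,
        # "RequestResponse" blocks until the function returns,
        # "Event" only hands the request over to the Lambda service.
        InvocationType="RequestResponse" if wait_for_result else "Event",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    if not wait_for_result:
        # Asynchronous calls are only acknowledged (HTTP 202), no result is returned.
        return response["StatusCode"]
    # Synchronous calls expose the function's result as a readable stream.
    return json.loads(response["Payload"].read())
```

With `wait_for_result=False` the caller gets back only the acknowledgement status, so any result has to be delivered another way, for example through a queue or a database.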

Since a single global queue for the asynchronous calls of all Lambdas causes problems, is there any way to set up N separate queues for groups of individual Lambdas?

Instead of being sent directly to Lambda, requests can be sent to SQS, and the Lambda service will consume messages directly from that queue. AWS describes how to connect it here. Separation can also be achieved by running application groups on different AWS accounts.

This solution may seem like a big effort, but it provides full isolation of resources. AWS Organizations will help you manage such accounts.
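On the Lambda side, consuming from a dedicated SQS queue could look roughly like the sketch below. `process_order` is a hypothetical placeholder for your own logic, and the `batchItemFailures` response only has an effect if the event source mapping has `ReportBatchItemFailures` enabled, which we assume here.

```python
import json


def process_order(order):
    """Hypothetical business logic; replace with your own."""
    if "id" not in order:
        raise ValueError("order without id")


def handler(event, context):
    """Entry point for a Lambda subscribed to an SQS queue."""
    failures = []
    for record in event["Records"]:
        try:
            # Each SQS message arrives as a record with a string body.
            process_order(json.loads(record["body"]))
        except Exception:
            # Report only the failed message so the rest of the batch is not retried.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

Because each group of Lambdas reads from its own queue, a backlog in one queue does not delay the others.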

Is there any easy way to log from AWS Lambda to Elasticsearch?

AWS provides a subscription mechanism in which another Lambda (or a Kinesis stream or Kinesis Firehose) is subscribed to your Lambda’s log group. The subscriber then filters and processes the logs and sends them to Elasticsearch. The precise method is described here.
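As a rough sketch of what the subscribed Lambda does: CloudWatch Logs delivers the log events base64-encoded and gzip-compressed under `awslogs.data`, and the function turns them into a body for the Elasticsearch `_bulk` API. The index name `lambda-logs` and the document field names are our assumptions, and the action lines assume a typeless Elasticsearch version (7 or later). Sending the body over HTTP is left out.

```python
import base64
import gzip
import json


def decode_log_events(event):
    """Unpack the base64-encoded, gzip-compressed payload from CloudWatch Logs."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def to_bulk_body(payload, index="lambda-logs"):
    """Render log events as newline-delimited JSON for the _bulk API."""
    lines = []
    for log_event in payload["logEvents"]:
        # Action line: index the document under the log event's own id.
        lines.append(json.dumps({"index": {"_index": index, "_id": log_event["id"]}}))
        # Source line: the document itself.
        lines.append(json.dumps({
            "timestamp": log_event["timestamp"],
            "message": log_event["message"],
            "log_group": payload["logGroup"],
            "log_stream": payload["logStream"],
        }))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"
```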

What is your approach to testing serverless applications? Do you use mock/stubs, test in the cloud?

Application logic can be successfully tested with unit tests. In addition, we use integration and component tests, according to the division proposed by Tobias Clemson. Additionally, we create service tests to check the functionality of a given stack from beginning to end.
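A unit test of this kind might look like the sketch below. It assumes the application logic is kept in a plain function, separate from the Lambda handler and the AWS SDK; `build_invoice` and the stubbed tax-rate provider are hypothetical names used only for illustration.

```python
import unittest


def build_invoice(order, tax_rate_provider):
    """Pure application logic: no AWS SDK calls, so it is easy to unit test."""
    rate = tax_rate_provider(order["country"])
    net = sum(item["price"] * item["quantity"] for item in order["items"])
    return {"net": net, "gross": round(net * (1 + rate), 2)}


class BuildInvoiceTest(unittest.TestCase):
    def test_applies_tax_rate_from_stub(self):
        order = {"country": "PL", "items": [{"price": 100, "quantity": 2}]}
        # The stub replaces whatever service would normally supply the rate.
        invoice = build_invoice(order, tax_rate_provider=lambda country: 0.25)
        self.assertEqual(invoice, {"net": 200, "gross": 250.0})
```

The test case can be run locally with `python -m unittest`, without any cloud resources.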

Testing permissions is a bit more complex. SAM Local helps in simulating the launch of our functions but it’s difficult to recreate more complex configurations with it. We advise creating your own version of the stack on your development account.

To make this possible, you must describe the whole stack properly using CloudFormation. In the case of serverless applications, the costs of such tests are negligible.

If we transfer RDS to a public subnet, where do we put the firewall?

In this case, Security Group for RDS or NACL for a subnet will work as your firewall. Remember that placing RDS on a public subnet won’t automatically give it a public IP, and without it RDS won’t be available from the public Internet.

To make the database available you must first switch the Publicly Accessible parameter to ‘yes’. Only then will the access rules imposed by the Security Group or NACL come into effect.
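A Security Group rule of this kind could be prepared as in the sketch below, which builds the arguments for the EC2 `authorize_security_group_ingress` call in boto3. The default port 5432 assumes a PostgreSQL database, the rule description is made up, and refusing a `/0` network is our own safety check rather than an AWS requirement.

```python
import ipaddress


def database_ingress_rule(security_group_id, allowed_cidr, port=5432):
    """Build arguments for EC2's authorize_security_group_ingress call."""
    # Raises ValueError if the CIDR is malformed.
    network = ipaddress.ip_network(allowed_cidr)
    if network.prefixlen == 0:
        raise ValueError("refusing to open the database to the whole Internet")
    return {
        "GroupId": security_group_id,
        "IpPermissions": [{
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [{"CidrIp": str(network), "Description": "trusted network"}],
        }],
    }
```

The resulting dictionary can be passed as keyword arguments to `boto3.client("ec2").authorize_security_group_ingress(...)`.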

Is it possible that because of RDS proxy, Lambda causes a lack of data consistency?

Lambdas can both write and read data, so you need to be aware that eventual consistency does occur. However, replication is handled by AWS with a very low delay, which should not exceed 100 ms.

Looking at the RDS architecture, there are two places where you accept eventual consistency in exchange for other benefits:

- deployment of instances in various Availability Zones and/or Regions ensures high availability

- horizontal Read Replica scaling increases performance

RDS Proxy itself is only a service supporting the management of the RDS connection pool and has no impact on data consistency.

Do you somehow monitor “eventual consistency”, assuming replication to “read-only” databases?

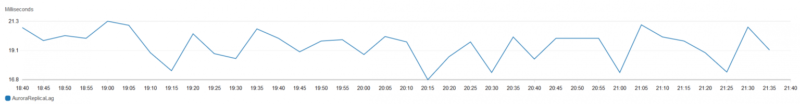

Replication delay can be monitored in two ways: directly on the database using a query, or via CloudWatch Metrics. CloudWatch Metrics has over 40 metrics available for the cluster. The one we need in this case is ReplicaLag.

If you want to perform an action when a certain threshold is exceeded, use CloudWatch Alarms. An alarm publishes a notification to SNS, which can be consumed in several ways, including sending an email. Another option is to call Lambda – and then the sky (and Lambda’s possibilities) is the limit.
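Such an alarm could be defined as in the sketch below, which builds the arguments for the CloudWatch `put_metric_alarm` call in boto3. The alarm name, the one-second threshold and the evaluation settings are assumptions to adjust; the sketch also assumes a standard RDS read replica, where ReplicaLag is reported in seconds per DB instance.

```python
def replica_lag_alarm(db_instance_id, sns_topic_arn, threshold_seconds=1.0):
    """Build keyword arguments for CloudWatch's put_metric_alarm call."""
    return {
        "AlarmName": f"{db_instance_id}-replica-lag",
        "Namespace": "AWS/RDS",
        "MetricName": "ReplicaLag",
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        "Statistic": "Average",
        # Evaluate one-minute averages and alarm after three bad minutes in a row.
        "Period": 60,
        "EvaluationPeriods": 3,
        "Threshold": threshold_seconds,
        "ComparisonOperator": "GreaterThanThreshold",
        # The SNS topic that receives the notification when the alarm fires.
        "AlarmActions": [sns_topic_arn],
    }
```

The dictionary can be passed as keyword arguments to `boto3.client("cloudwatch").put_metric_alarm(...)`.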

Is the combination of Lambda + RDS so frequent in projects? These aren’t services that work well together; if you need a relational database, why not use Fargate?

Our experience shows that the Lambda + RDS combination can be successfully used in some cases. International conferences and presentations (including official AWS presentations and materials) seem to confirm this. In addition, RDS Proxy, one of the latest services mentioned during the webinar, was created in response to exactly this configuration.

Fargate is also a good fit when you need a web application that is highly scalable and available. Each case must always be analysed individually and adapted to the requirements.

In our solutions, we often use Lambdas without connecting them to API Gateway. This configuration eliminates a large part of the solution’s costs. A more detailed Fargate vs Lambda comparison can be found here.

Does the CQRS approach support generating and returning an ID from the database as a result of the command?

Everything, of course, depends on the implementation. The industry is divided in this respect and, according to the “canonical” approach, a command shouldn’t return anything.

On the other hand, if we take a closer look at the purpose of this rule, we’ll see that what a command shouldn’t return is domain data in the broad sense. We can safely return information such as the command result, errors, or the number/identifier of the new version of the modified object.
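One possible shape of such a command result is sketched below. `CommandResult`, `handle_create_order` and the dict-based `store` are hypothetical names for illustration. The identifier is generated in the handler here, but a database-generated ID could be put in the same field.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class CommandResult:
    """Carries only metadata about the outcome, never domain data."""
    success: bool
    aggregate_id: Optional[str] = None
    version: Optional[int] = None
    errors: List[str] = field(default_factory=list)


def handle_create_order(command, store):
    """Hypothetical command handler; `store` is any dict-like write model."""
    if not command.get("items"):
        return CommandResult(success=False, errors=["order has no items"])
    order_id = str(uuid.uuid4())
    store[order_id] = {"items": command["items"], "version": 1}
    # Return the identifier and version only; reading the order is a query's job.
    return CommandResult(success=True, aggregate_id=order_id, version=1)
```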

Is there anything like PoolConteners for RDS? Something similar to ThreadPool or a JDBC pool such as c3p0?

RDS itself doesn’t provide connection pool management capabilities. A new service, RDS Proxy, addresses this need. Remember that it is currently in preview. You can find more about it here.

You “warm up” every X minutes to avoid cold starts. Isn’t it cheaper and easier to go from serverless to classic services that can also be scaled successfully?

As usual, you can say “it depends”.

Let’s start with the warm-up itself. If you need to warm up Lambda, you can assume that the architecture you’ve chosen is not optimal. Our warm-up resulted mainly from using Lambdas in combination with a VPC, which caused cold starts of up to a few seconds.

AWS had to attach an ENI (Elastic Network Interface), i.e. a bridge to our VPC. Fortunately, the problem no longer exists. You can read more on it here.
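For setups that still rely on warming, the handler usually needs to recognise the scheduled ping and return early. A minimal sketch follows, assuming the scheduled rule sends a payload with a `warmup` flag; the flag name and `do_work` are our own placeholders.

```python
def do_work(event):
    """Placeholder for the real business logic (hypothetical)."""
    return {"echo": event}


def handler(event, context):
    """Lambda entry point that recognises scheduled warm-up pings."""
    # "warmup" is a hypothetical marker set in the scheduled rule's payload.
    if isinstance(event, dict) and event.get("warmup") is True:
        # Return immediately: the container stays warm, no business logic runs.
        return {"warmed": True}
    return do_work(event)
```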

When it comes to scaling itself, the main difference between an AWS Lambda-based solution and a server-based application is that Lambda allows you to scale individual pieces of the application down to zero (and it does so very quickly).

This solution will work primarily when the load on the application is uneven over time, i.e. the application works only at certain times or the individual elements of the application scale in a different way.

An additional advantage of the serverless application is the total lack of server infrastructure management – you throw in the code and that’s it. A good example of using serverless will be the invoicing process. It’s run once a month and individual elements can be performed simultaneously and scale horizontally.

If you’re dealing with a constant and continuous load on the entire application, it will be much more efficient and, above all, cheaper to run a container permanently.

Why is the Node.js cold start so short compared to, let’s say, Go? Why are compiled languages slower than interpreted ones?

We are talking here only about a cold start, i.e. the delay in handling the first request. In Java and .NET, the longer cold start is due to the fact that these platforms use just-in-time compilation.

The machine code is compiled only once the application has started. However, we’re not in a losing position: cold starts in .NET Core can be shortened using Lambda Layers and pre-jitting techniques.

The Go language, unlike the ones mentioned above, is compiled straight into machine code, which lowers cold starts. They are only slightly worse than those of interpreted languages (native support for Go appeared relatively recently) but the future looks brighter.

If we look at the overall performance, compiled languages perform better than interpreted ones, as expected. You can learn more from this article comparing AWS Lambda Node.js, Python, Java, C# and Go performance.
