21 June 2023

How does advanced cloud really affect the project budget? A real-life comparison of AWS Lambda performance in various configurations

No more overpaying for cloud resources! Today, I’ll show you different variations of AWS Lambda performance and their impact on your budget.

Talking to machines has become as normal as talking to other people – you can communicate with almost every piece of tech: sensors, IoT devices, and other web services. To provide failure-free, end-to-end communication, each link in your chain must work flawlessly. Only then can you achieve highly available and scalable services, capable of handling the necessary traffic at the lowest possible cost.

The right choice depends on the use case

That’s where cloud providers like Amazon Web Services come into play, offering various solutions to easily deploy your applications and expose your APIs – in this case, AWS Lambda, AWS App Runner, and AWS Fargate. Each has its strengths and limitations, so before choosing, research which one suits your application’s usage model best:

- App Runner is great when you expect high traffic lasting only a few hours during the day.

- Fargate, with its scaling capabilities, is the way to go if you predict the traffic to be continuous or to follow a pattern.

- Lambda is almost perfect for short and periodic jobs.

However, in my experience, AWS Lambda is a far more powerful tool, capable of handling much more than just cron jobs.

Take a closer look with me!

A brief explanation of Lambda

According to the official AWS documentation, AWS Lambda is “a serverless, event-driven compute service that lets you run code for virtually any type of application or backend service without provisioning or managing servers”.

Simply put, you can focus mainly on implementing the business logic, and AWS will take care of the rest.

Lambda is easy to configure. When your code is ready, you only need to specify a trigger (e.g. an HTTP event or a cron schedule), make sure that a proper IAM (Identity and Access Management) role allowing the execution of Lambda is assigned to it, and you are ready to go.

How do Lambdas work?

Lambdas are executable functions nested in small, hermetic containers that use one of the available runtimes to execute your code. You’re free to choose from runtimes such as Node.js, Python, Java, and Go, or even build a custom one. Thanks to that, the barrier to entry for AWS Lambda is quite low, as it supports the popular programming languages that people feel most comfortable with.

Once a Lambda is triggered, before its initial execution, there’s a slight delay called a “cold start” – the time a Lambda needs to spin up its execution environment. After that, the instance stays warm for a while (roughly 15 minutes, though AWS doesn’t guarantee an exact figure). As long as the instance stays warm, subsequent invocations skip the cold start and execute faster.
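One common way to see cold starts from inside a function is a module-scope flag: module code runs once per execution environment, so the flag is true only for the first invocation served by a fresh instance. Below is a minimal sketch, assuming a Node.js runtime; `consumeColdStartFlag` and the simplified `handler` signature are hypothetical names for illustration, not the code used in our benchmarks.

```typescript
// Module scope is evaluated once per execution environment,
// i.e. once per cold start.
let isColdStart = true;

// Returns true only for the first call on this instance.
function consumeColdStartFlag(): boolean {
  const wasCold = isColdStart;
  isColdStart = false; // every later invocation on this instance is warm
  return wasCold;
}

// Simplified handler: the real AWS event and context types are omitted.
export async function handler(): Promise<{ coldStart: boolean }> {
  const coldStart = consumeColdStartFlag();
  // ... business logic (e.g. a database query) would go here ...
  return { coldStart };
}
```

Logging the flag together with the measured duration makes it easier to separate cold and warm invocations when you analyze the results.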

A runtime isn’t the only thing you can configure to fit your needs. Other options include:

- defining available memory,

- attaching it to a VPC (Virtual Private Cloud),

- setting provisioned concurrency,

- setting the maximum timeout (HINT: when Lambda is attached to AWS API Gateway, its maximum timeout is 30 seconds; otherwise, the maximum timeout is 15 minutes. These two are hard limits).
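The two timeout limits from the hint can be expressed as a tiny helper. This is only a sketch: `LambdaSettings` and `effectiveTimeoutSeconds` are hypothetical names, and the limits are the ones quoted above.

```typescript
interface LambdaSettings {
  memoryMb: number;
  timeoutSeconds: number;
  behindApiGateway: boolean;
}

const LAMBDA_MAX_TIMEOUT_S = 15 * 60; // Lambda limit quoted above
const API_GATEWAY_MAX_TIMEOUT_S = 30; // API Gateway limit quoted above

// Returns the longest time a caller can actually wait for a response.
function effectiveTimeoutSeconds(settings: LambdaSettings): number {
  const cap = settings.behindApiGateway
    ? API_GATEWAY_MAX_TIMEOUT_S
    : LAMBDA_MAX_TIMEOUT_S;
  return Math.min(settings.timeoutSeconds, cap);
}

const api: LambdaSettings = { memoryMb: 512, timeoutSeconds: 60, behindApiGateway: true };
const worker: LambdaSettings = { memoryMb: 512, timeoutSeconds: 60, behindApiGateway: false };

console.log(effectiveTimeoutSeconds(api)); // 30
console.log(effectiveTimeoutSeconds(worker)); // 60
```

In other words, a 60-second timeout only makes sense for Lambdas that are not called through API Gateway.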

How is this seemingly small function capable of handling high traffic?

Imagine a single Lambda instance that needs to handle 10 concurrent requests. It can’t, because of its lifecycle – first, it needs to warm up during the cold start, then execute the function, and only after that is it ready to handle a new request. Fortunately, AWS scales Lambda horizontally and lets you run up to 1000 concurrent executions per region by default (it’s a soft limit). Keep in mind that each newly spawned instance goes through a cold start; only once an instance has finished its execution can it serve the next request warm. If you need instances that are already warm, that’s what provisioned concurrency is for.
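A rough mental model of this scaling behavior can be sketched in a few lines. It assumes that one instance serves one request at a time and ignores AWS burst-scaling rates; `estimateScaling` and its parameters are hypothetical names.

```typescript
interface ScalingEstimate {
  instancesNeeded: number; // instances running at the same time
  coldStarts: number; // instances that have to be freshly spawned
  throttled: number; // requests above the concurrency limit
}

// Simplified model: one instance handles exactly one request at a time.
function estimateScaling(
  concurrentRequests: number,
  warmInstances: number,
  concurrencyLimit: number = 1000, // default soft limit mentioned above
): ScalingEstimate {
  const instancesNeeded = Math.min(concurrentRequests, concurrencyLimit);
  return {
    instancesNeeded,
    coldStarts: Math.max(0, instancesNeeded - warmInstances),
    throttled: Math.max(0, concurrentRequests - concurrencyLimit),
  };
}

console.log(estimateScaling(10, 0)); // 10 instances, 10 cold starts, nothing throttled
console.log(estimateScaling(250, 100)); // 250 instances, 150 cold starts, nothing throttled
```

With 100 instances already warm, a burst of 250 concurrent requests still causes 150 cold starts in this model.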

The purpose

I mentioned before that Lambdas’ design is perfect for periodic and background tasks, e.g. file processing. Lambdas’ ability to scale up quickly will come in handy when you encounter an unexpected traffic peak. Furthermore, Lambdas’ hermetic nature makes it possible to use them as separate threads of a bigger process.

Would you like to build a simple API or just make an application’s POC? Lambdas are a great choice for that too. When AWS Lambda is used together with other AWS services (e.g. AWS Step Functions) it can even be a part of way more complex scenarios than simply handling events. As you can see, tons of options.

AWS Lambda pricing

AWS Lambda is an insanely cheap service.

When there’s no traffic and your Lambda just sits idle without being triggered, you’re not charged at all. The only things you pay for are the number of requests served and the total computing time, rounded up to the nearest millisecond. Total computing time (or Lambda duration) shows how much time a Lambda needs to execute (cold start is not included). For example, if a Lambda executes in 323.63 ms and its cold start takes 376.64 ms, you are billed for 324 ms.

However, there’s a little catch. AWS Lambda pricing depends on the memory you allocate to your functions. The more memory you assign to Lambda, the higher the price of 1 ms. No need to worry though: the difference isn’t excessive, and increased memory greatly improves Lambda’s performance.
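The billing rules above can be sketched as follows. The two prices are assumptions (x86 list prices as I remember them; always check the current AWS pricing page for your region), and the function names are hypothetical.

```typescript
// Assumed list prices; verify them on the AWS pricing page.
const PRICE_PER_GB_SECOND_USD = 0.0000166667;
const PRICE_PER_REQUEST_USD = 0.2 / 1e6; // $0.20 per 1M requests

// Duration is rounded up to the nearest millisecond; cold start is excluded.
function billedDurationMs(durationMs: number): number {
  return Math.ceil(durationMs);
}

// The price of a single invocation grows linearly with assigned memory.
function invocationCostUsd(durationMs: number, memoryMb: number): number {
  const gbSeconds = (memoryMb / 1024) * (billedDurationMs(durationMs) / 1000);
  return gbSeconds * PRICE_PER_GB_SECOND_USD + PRICE_PER_REQUEST_USD;
}

// The example from the text: 323.63 ms of execution after a 376.64 ms cold start.
console.log(billedDurationMs(323.63)); // 324

for (const memoryMb of [256, 512, 1024]) {
  const cost = invocationCostUsd(323.63, memoryMb);
  console.log(`${memoryMb} MB: ${cost.toExponential(3)} USD per invocation`);
}
```

Note that the caller still waits for the cold start plus the duration (about 700 ms in this example), even though only 324 ms are billed.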

The official AWS website is full of calculators and pricing options, so you can see for yourself.

Performance

Lambda with more memory not only executes faster but has a shorter cold start as well. Of course, a lot depends on the business logic – if you connect to a database or perform CPU-consuming operations then you might expect a bit longer execution from your Lambda. Knowing all that, my colleagues from The Software House and I investigated and measured the exact impact on performance with different Lambda configurations.

Testing environment

In order to do meaningful research (rather than art for art’s sake) we used Lambda to perform a simple database query for testing – quite a common scenario in software development. This query should fetch all records from the given table (five records, to be precise). Also, we wanted to know if there’s a difference between Amazon RDS (for PostgreSQL) and DynamoDB. Last but not least we decided to check how big an impact a VPC has on duration and cold start.

To sum up we defined the following configurations:

- Lambda using RDS with VPC

- Lambda using RDS without VPC

- Lambda using DynamoDB with VPC

- Lambda using DynamoDB without VPC

For each configuration, we created three Lambdas, varying in memory size: 256MB, 512MB, and 1024MB. We ran benchmarks for 10, 100, and 250 concurrent Lambda invocations. Both databases were available in a single region (eu-west-1), with neither multiple Availability Zones nor replication.

Code snippets

A handler extracting data from RDS:

A handler extracting data from DynamoDB:

Both of these handlers are based on our serverless-boilerplate that you can freely use.

Detailed configuration of both databases

RDS

- Instance class: db.t2.medium

- Engine version: 12.11

- vCPU: 2

- RAM: 4 GB

- Storage type: General Purpose SSD (gp2)

DynamoDB

- Capacity mode: on demand

- Table class: DynamoDB Standard

Time to crunch some numbers!

Cold starts and durations vs memory size

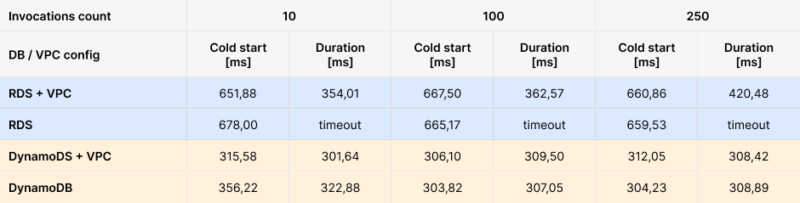

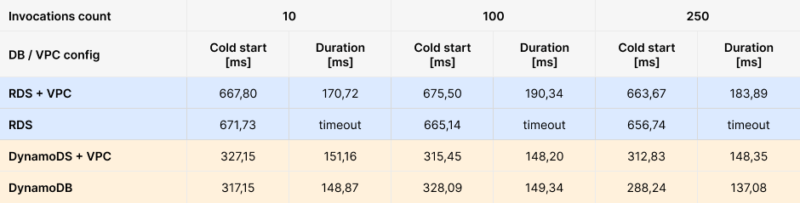

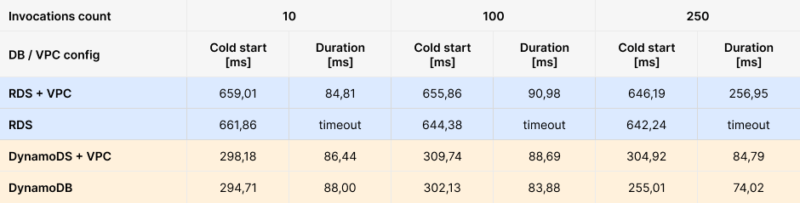

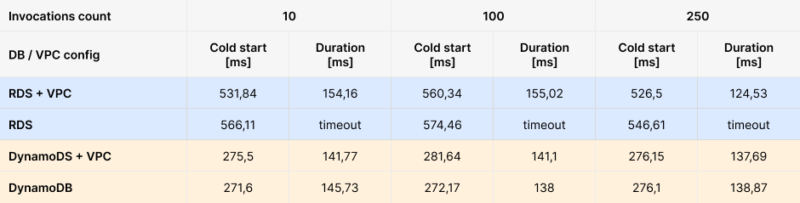

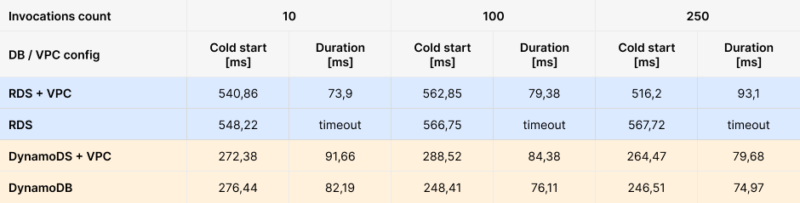

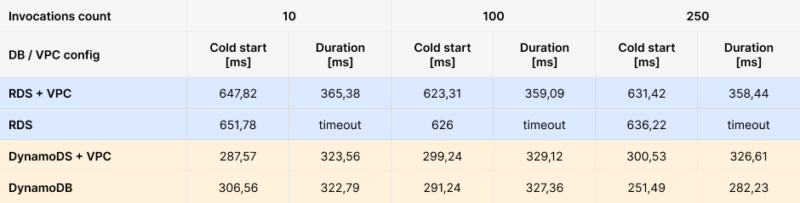

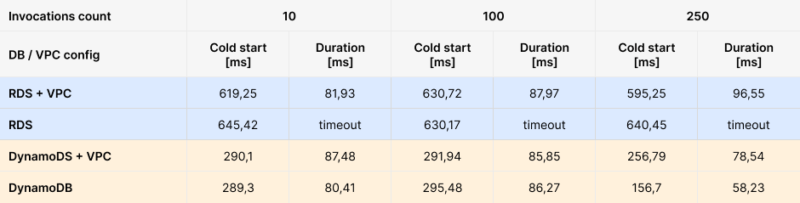

In Tables 1, 2, and 3 below, you can see the results of measured duration and cold start for each memory size configuration.

Results

There are a few things that you can probably spot pretty quickly:

- Cold starts of Lambdas using DynamoDB are about half as long as those of Lambdas using RDS.

- Lambdas that use RDS without being attached to a VPC time out. That happens because a Lambda needs to be in the same VPC as your RDS instance to connect to it.

- The impact of memory size on Lambdas’ durations. If you double the memory, expect your Lambda to execute about twice as fast.

- Except for a little peak in the 1024MB RDS + VPC Lambda, both DynamoDB and RDS durations are comparable.

How do Webpack options affect the results?

However, the database and the amount of assigned memory aren’t the only factors affecting Lambdas’ speed – a lot depends on the bundler and its configuration. In our TSH-made Serverless Boilerplate, we use Webpack by default. The results shown in Tables 1-3 were measured using the default config, so we also examined how two additional options, minimize and mode, affect cold start and duration.

minimize: true

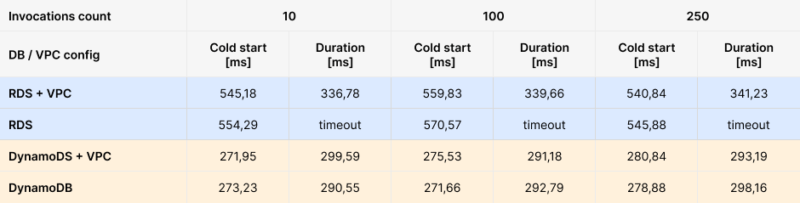

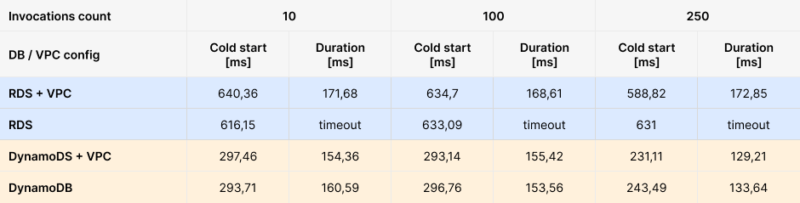

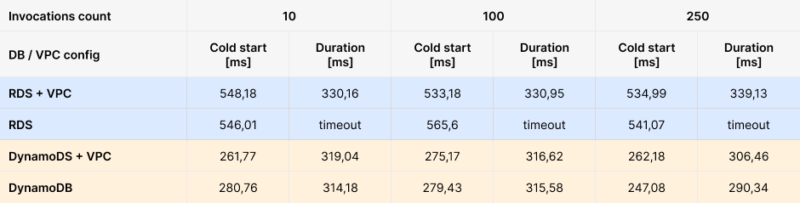

Let’s see how enabling the minimize option affects the original results. After setting the minimize option to true, we had to slightly fix the minimizer config because the RDS Lambdas did not work properly. The issue was caused by wrong table and column names used in the ORM query, presumably because the ORM derives them from names that the minimizer mangles. The solution was pretty straightforward – enable keep_fnames in the minimizer options. Tables 4-6 show the results for minimized Lambdas.
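For reference, such a setup could look roughly like the fragment below. It is a sketch rather than the exact config from our boilerplate, and it assumes that Webpack 5 and `terser-webpack-plugin` are installed; only the `minimize` and `keep_fnames` settings come from the description above.

```typescript
import TerserPlugin from "terser-webpack-plugin";
import type { Configuration } from "webpack";

const config: Configuration = {
  mode: "none", // the value used for the baseline measurements
  optimization: {
    minimize: true, // the option examined in this section
    minimizer: [
      new TerserPlugin({
        terserOptions: {
          // Keep function names intact so that code relying on them
          // (here: the ORM query) keeps working after minification.
          keep_fnames: true,
        },
      }),
    ],
  },
};

export default config;
```

Keeping function names costs a few bytes in the bundle, so it is worth enabling only when something in your code actually depends on them.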

Results

When comparing the results with the original ones, we noticed that:

- RDS cold starts decreased by about 100 ms with a little improvement in duration,

- DynamoDB had a little improvement in cold starts but no influence on duration.

mode: ‘production’

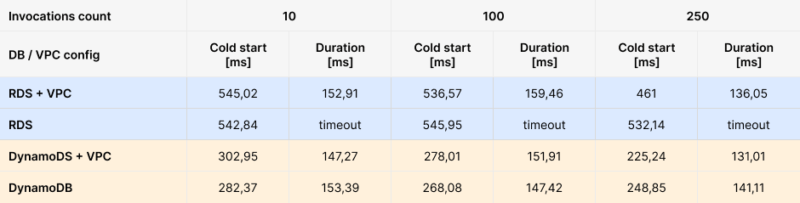

By default, the mode option had a ‘none’ value assigned. Tables 7-9 present the results after assigning a ‘production’ value to it.

Results

So what has changed? Compared to the original values we can see that the performance for both RDS and DynamoDB is a little bit better but the difference is not as visible as in the previous case.

minimize: true & mode: ‘production’

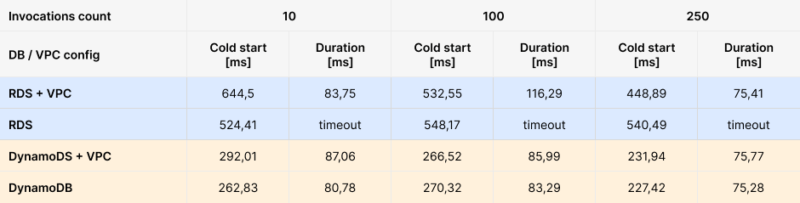

What if we combine both changes, minimize and mode? The results are shown in Tables 10-12 below.

Results

There’s virtually no difference between the numbers above and those measured with just the minimize option set to true. Frankly, we expected this after analyzing the results for mode set to ‘production’. Nevertheless, we had to check it for science.

Bundle size

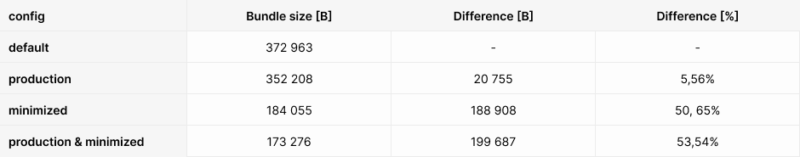

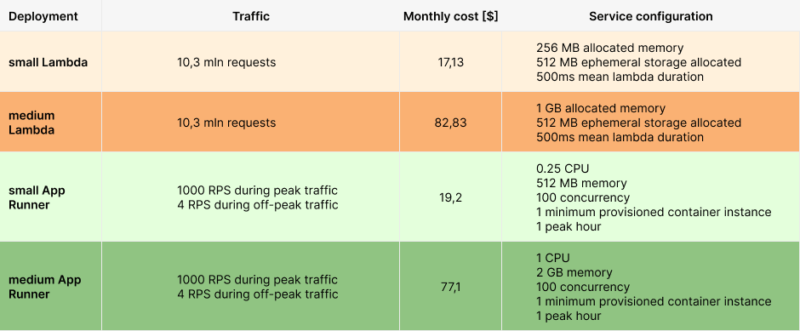

Last but not least – bundle sizes for each Webpack configuration.

The amount of memory allocated to Lambda does not affect bundle size.

In our testing environment, the difference in bundle size was visible between the Lambda connected to RDS and the one connected to DynamoDB, due to the different code used to connect to and integrate with each database. We compared a NoSQL database (DynamoDB) with an SQL database (Postgres). If we were to compare two SQL databases (e.g. Postgres and MySQL), then most likely the only change would be the database URL, without any actual changes to the amount of code itself.

Tables 13 and 14 show how the size changed between different options.

Results

While setting the mode to production did not change much, minimization reduced the size by about half. Maybe it doesn’t seem like much when we look strictly at the size itself (about 800kB for RDS, and 200kB for DynamoDB), but the percentage difference is breathtaking.

The bigger the initial size of the Lambda, the greater the benefits of minimizing the code.

How does Lambda affect your budget?

Choosing a cost-effective service for deploying your app is not always easy due to various factors:

- estimated and actual traffic,

- computing power of your resources,

- availability,

- resilience,

- scaling abilities,

- and more.

A great thing about Lambda is its “pay as you go” billing mode – you only pay for what you use with no additional costs. However, it’s a double-edged sword – the more you use, the more you pay.

App Runner offers a different pricing model – you pay for every hour of your application being active, even if it isn’t used by anyone.

In both cases, the monthly bill depends on the computing resources you assign as well.

We’re going to make a cost comparison for three cases:

- We don’t know the traffic but we want to be prepared for a high one.

- We know the traffic but we don’t know the schedule.

- We know the traffic without encountering any traffic peaks.

In each case, we assume that the application is deployed in the eu-west-1 (Ireland) region.

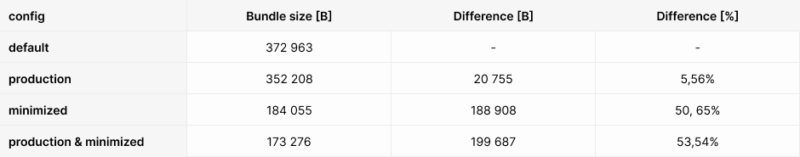

Case 1: Unknown traffic

For this scenario, let’s assume we’re a unicorn startup that has just finished an app MVP. The business expects about 10 million requests in the first month. However, the advertising didn’t go so well, and we only received 2.6 million hits (roughly one request per second).

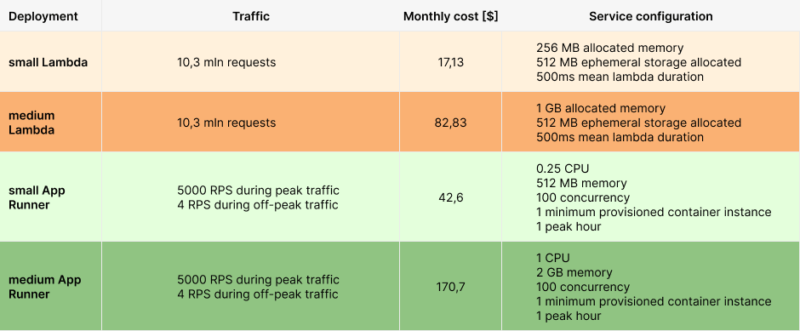

You can see the approximate cost of this scenario below:

Monthly costs have a pretty wide range depending on service configurations (as well as the services themselves).

Lambda is visibly the cheapest one because its free tier includes 1M free requests per month and 400,000 GB-seconds of compute time per month.

Even though the traffic is very low, we will still owe $14 for App Runner in its cheapest configuration, while with much bigger Lambdas we pay only $1.50 more.

The difference? For low traffic, Lambda is a few times cheaper than App Runner with more resources, and over a dozen times cheaper when using the less expensive configuration.
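To see why the free tier matters so much at low traffic, here is a rough monthly estimate for Lambda alone. It is a sketch under stated assumptions: the prices are assumed list prices, the 100 ms average duration is a made-up value rather than a measured one, and the result is not meant to reproduce the table above.

```typescript
interface LambdaUsage {
  requests: number; // invocations per month
  avgDurationMs: number; // hypothetical average duration
  memoryMb: number;
}

// Free tier values quoted in the text.
const FREE_REQUESTS = 1e6;
const FREE_GB_SECONDS = 400000;
// Assumed list prices; verify them on the AWS pricing page.
const PRICE_PER_MILLION_REQUESTS_USD = 0.2;
const PRICE_PER_GB_SECOND_USD = 0.0000166667;

function monthlyLambdaCostUsd(usage: LambdaUsage): number {
  const gbSeconds =
    usage.requests * (Math.ceil(usage.avgDurationMs) / 1000) * (usage.memoryMb / 1024);
  const billableGbSeconds = Math.max(0, gbSeconds - FREE_GB_SECONDS);
  const billableRequests = Math.max(0, usage.requests - FREE_REQUESTS);
  return (
    (billableRequests / 1e6) * PRICE_PER_MILLION_REQUESTS_USD +
    billableGbSeconds * PRICE_PER_GB_SECOND_USD
  );
}

// 2.6M requests at 256 MB: compute time stays within the free tier,
// so only the 1.6M requests above the free million are billed.
const lowTraffic = monthlyLambdaCostUsd({ requests: 2.6e6, avgDurationMs: 100, memoryMb: 256 });
console.log(lowTraffic.toFixed(2)); // "0.32"
```

With these assumptions, the compute part of the bill only appears once the monthly usage exceeds 400,000 GB-seconds.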

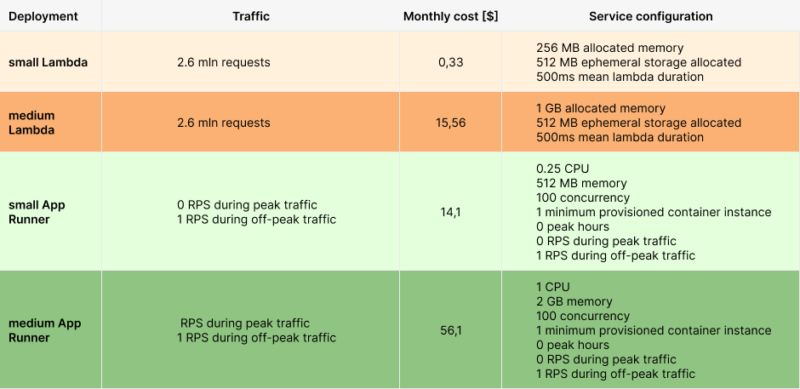

Case 2: Known traffic, unknown schedule

Okay, let’s assume that our business calculations were right after all, and we achieved the desired traffic of 10 million requests. We don’t know our users and their habits too well yet, so we don’t know how and when they will use the app. In the evening, lying in their beds before sleep? Or maybe during a lunch break at work? That’s why even though we know the traffic itself, we don’t know its schedule. With no data, it’s impossible to guess when traffic peaks will occur and how long they will last.

I’ll show you a case where you encounter some, rather small, traffic peaks.

As you can see there are pretty visible differences in costs.

The small Lambda is now only $2 cheaper than the small App Runner.

In the medium configuration, App Runner starts winning the battle.

In both cases, the difference between the small and medium configurations is fourfold.

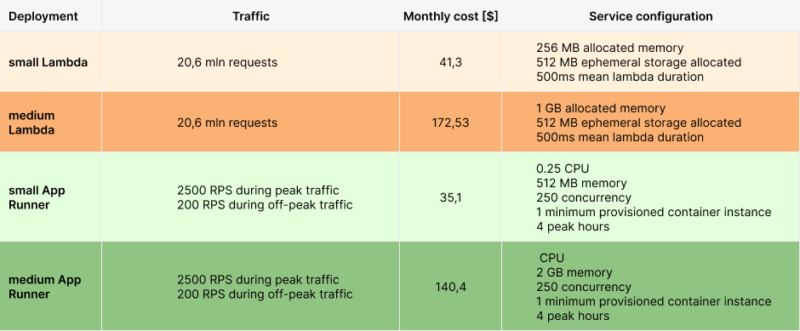

What would be the cost for high-traffic peaks?

It’s hard to keep up with these changes!

With higher traffic peaks, Lambda costs remain the same, while the App Runner ones have doubled.

That’s because we’re charged for every additional virtual machine. As long as you don’t exceed the concurrency too much the costs won’t skyrocket but if you do – oh boy, beware of the bailiff!
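The mechanism behind this can be sketched with a simple model: every instance serves a limited number of concurrent requests, and each extra instance is paid for as long as the peak lasts. All names and numbers below are hypothetical; in particular, the hourly rate is a placeholder, not an actual App Runner price.

```typescript
// How many instances are needed to serve a traffic peak.
// At least one instance is always kept running.
function instancesForPeak(
  peakConcurrentRequests: number,
  maxConcurrencyPerInstance: number,
): number {
  if (maxConcurrencyPerInstance <= 0) {
    throw new Error("maxConcurrencyPerInstance must be positive");
  }
  return Math.max(1, Math.ceil(peakConcurrentRequests / maxConcurrencyPerInstance));
}

// Extra cost of the instances added on top of the first one.
function peakSurchargeUsd(
  instances: number,
  peakHoursPerMonth: number,
  hourlyRatePerInstanceUsd: number, // placeholder value, check real pricing
): number {
  return (instances - 1) * peakHoursPerMonth * hourlyRatePerInstanceUsd;
}

const instances = instancesForPeak(250, 100);
console.log(instances); // 3
console.log(peakSurchargeUsd(instances, 60, 0.1).toFixed(2)); // "12.00"
```

Doubling either the peak height or its length doubles the surcharge in this model, which is why unexpected peaks hurt the instance-based bill much more than the pay-per-request one.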

Case 3: Well-estimated traffic

Knowing the traffic schedule is as important as knowing the traffic itself. In previous scenarios, to simplify our calculations, we assumed that the application scaled on time and there was no downtime. Downtime can result from either not enough computing power in Lambda or not enough active instances in App Runner.

What would a perfect scenario, prepared for (almost) everything, look like then? Let’s increase the traffic to see the difference better.

As expected, this time App Runner was the winner. Not only does it handle a tremendous load, but it is also cheaper than Lambda. Although the difference in the small configuration is rather slight, saving $30 per month on the medium one is a very pleasant surprise.

Although this is an article on how awesome Lambda is, one thing should be clear – it’s not a one-size-fits-all solution. Lambda will save you a lot of complexity and costs, but only if used wisely.

The famous “Spider-Man” quote “With great power comes great responsibility” fits Amazon Web Services perfectly. AWS (no matter which service you pick) has great power, but the responsibility of choosing the most suitable one for your application is yours.

What impacts Lambda performance? Conclusions

Congrats, you got through all those tables! Now let’s see about that practical impact on your project.

Lambdas duration

Looking at the Lambda duration results, honestly, there’s no real difference between RDS and DynamoDB. The only difference? Cold starts. With DynamoDB, a cold start takes about half as long as with RDS. As I mentioned earlier, cold start time is not billed, but it still counts toward the total time needed to get the results.

Both solutions are very fast and efficient so you should choose by analyzing the purpose of your database because each fits different use cases better.

For example:

- When designing a system with well-structured data and relations between objects, a Relational Database Service is the best choice (the name speaks for itself).

- Choosing DynamoDB is worth it when you rely on simple queries (your database has a key-value structure).

- DynamoDB is also great when you need a very high read/write rate.

Virtual Private Cloud (VPC)

Firstly, a VPC is necessary to connect to RDS.

Secondly, a VPC doesn’t have a massive impact on cold starts. Beforehand, we kind of expected that a VPC would always extend the duration of cold starts. But according to OUR OWN results, there’s a 50/50 chance here – sometimes Lambdas using a VPC had longer cold starts and sometimes it was the other way round. The difference is almost unnoticeable, so you can safely use a VPC without worrying about the performance.

What’s more, you kill two birds with one stone and improve security too. VPC creates an isolated environment in the AWS cloud. This way the communication of your infrastructure (in this case – Lambdas connecting to databases) doesn’t leak further into AWS but stays inside VPC.

Memory

Assigned memory had the biggest impact on Lambdas’ performance, hands down.

Whereas memory doesn’t affect cold start, it can significantly reduce the duration. Just keep in mind that increasing Lambda’s memory increases the price of every millisecond as well. Doubling the memory will make your Lambda NEARLY twice as fast (nearly being the keyword here).

After all, if doubling the memory made Lambda EXACTLY twice as fast, there would be no overall difference in costs, and we would always assign more memory to Lambdas for the speed boost.

That is why we always think about costs first and the execution time second. We noticed that using smaller Lambdas is more profitable. The difference will be imperceptible for single invocations, but you will see it once you start scaling.
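The trade-off can be checked with a quick calculation: the compute cost of an invocation is proportional to memory multiplied by billed duration. The sketch below uses made-up durations purely for illustration; `Measurement` and `costRatio` are hypothetical names.

```typescript
interface Measurement {
  memoryMb: number;
  durationMs: number; // hypothetical values, not taken from our tables
}

// Compute cost is proportional to memory × billed duration (GB-seconds).
function gbSeconds(m: Measurement): number {
  return (m.memoryMb / 1024) * (Math.ceil(m.durationMs) / 1000);
}

// Ratio above 1 means the candidate configuration is more expensive per call.
function costRatio(base: Measurement, candidate: Measurement): number {
  return gbSeconds(candidate) / gbSeconds(base);
}

const small: Measurement = { memoryMb: 256, durationMs: 400 };
const nearlyTwiceAsFast: Measurement = { memoryMb: 512, durationMs: 220 };
const exactlyTwiceAsFast: Measurement = { memoryMb: 512, durationMs: 200 };

console.log(costRatio(small, nearlyTwiceAsFast).toFixed(2)); // "1.10"
console.log(costRatio(small, exactlyTwiceAsFast).toFixed(2)); // "1.00"
```

In this example, the bigger Lambda is 45% faster but about 10% more expensive per invocation, a difference that only becomes visible at scale.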

Webpack

The Webpack minimize option is also a real star here. It:

- shortens cold start by 80 – 120 milliseconds,

- slightly reduces Lambda duration,

- decreases bundle size by nearly half.

This can truly be a game changer for serverless applications based on Lambdas.

Thank you for bearing with me on this rollercoaster of numbers. There are lots of factors to take into consideration when designing an app – no matter if it’s a brand-new product, a new feature added to an already-existing solution, or active maintenance.

Lambdas are awesome, and you will achieve amazing results using them, ONLY IF you remember to adjust them to your individual use case, expected traffic with peaks and lows, available budget, and so on.
