24 February 2025

“We're shifting from software engineering to product engineering” – Dimitris Kountanis about AI-powered software development tools

For Dimitris Kountanis, adopting AI is a no-brainer – the efficiency gains are just too big to ignore. However, he stresses that it’s not easy to get AI to do exactly what a developer wants. He also highlights a rarely talked-about side effect of coding with AI: the opportunity for software engineers to transition to product development.

The CTO vs Status Quo series studies how CTOs challenge the current state of affairs at their company to push it toward a new height … or to save it from doom.

“Great coders who understand prompting can probably do 10x the velocity of someone who’s just learning to use it.”

You won’t get a working Facebook clone out of AI with a single prompt – or many prompts, for that matter.

But for open-minded software developers who are willing to learn, AI can enrich their careers in astonishing ways.

We discussed this topic with Dimitris Kountanis, the Head of Frontend at Native Teams. He shared his experience-based perspective on:

- how AI allows developers to generate more value for the end-user,

- why successful tech teams are shifting their focus from software engineering to product development,

- what is the secret to boosting creativity and velocity for developers,

- where to get real-time updates on everything going on with AI.

About Dimitris & Native Teams

Bio

Frontend engineer, fintech enthusiast, and Head of Frontend at Native Teams. He graduated college in 2019 with a major in Software and Data Analysis Technologies while already working as a Frontend Developer and joined Native Teams as a Founding Engineer a year later.

Expertise

Frontend development, product development, leadership

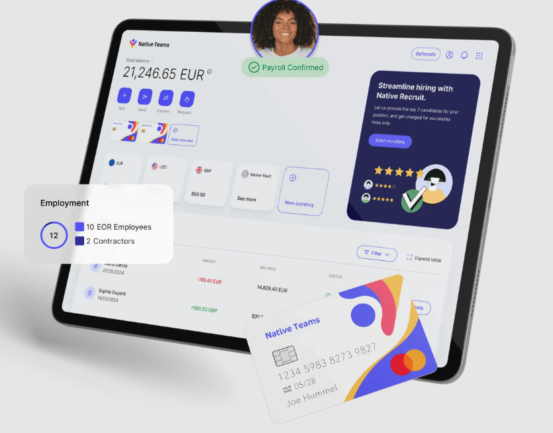

Native Teams

Provider of a platform that helps companies of all sizes hire, pay, and manage their teams across 80+ countries without the need to open local entities. Trusted by 70,000+ clients around the world, the platform cuts administrative time by 90% and reduces costs by up to 3x.

Native Teams’ vision

Piotr Urbas: Hi Dimitris, thanks for joining us! Congratulations on an excellent year at Native Teams – your team grew to 270+ employees and reached £10M ARR. What do you think made this possible?

Dimitris Kountanis: Hello, Piotr! To be honest, things are moving so fast that I still haven’t fully processed these milestones.

At the beginning of 2024, we were about 150 people, so our headcount almost doubled in just one year.

We had a great foundational team that was able to build fast, a smart marketing approach, and a really good market positioning. Starting in Balkan countries and expanding to other places later on turned out to be a great decision.

How important was the role of the frontend team in reaching these milestones?

Our frontend team is split. One part works on marketing, the other on our application. Although they complement each other, the everyday job demands are totally different.

The primary focus is marketing – that’s where the frontend team has the strongest impact on the bottom line. It’s about creating content, building a blog, creating landing pages, and creating all the brochures for selling.

The application side’s goal is to make an interface that works well and complements the backend.

AI enters the stage

I know that you’re passionate about AI. What tools do you and your developers use?

We integrated AI tools into our standard workflow in November 2024. The reason is simple: it’s impossible to code as fast as we want without AI. Plus, the insights and guidance it provides are really helpful.

It began with me encouraging everyone to download Cursor. I told all frontend engineers to use the free trial for a week, see what happens, and report back to me.

Everybody immediately got better and faster. Every task went smoother. At that point, it became obvious that you couldn’t ignore AI tools for coding. It just didn’t make sense to work without them anymore.

We switched to GitHub Copilot for a moment, but now we’re fully migrating to Cursor because it seems to be faster.

I’m not a software engineer, but I recently built a Swift app with Cursor and ChatGPT, so I fully agree that these tools are helpful.

Did you calculate how much faster your team has been coding with AI?

I haven’t crunched the numbers yet, but it feels like we’ve at least doubled our velocity thanks to AI tools. Something that used to take a whole day to build, I can now create in an hour – including the Asana task, Figma file, and all other parts of the process.

With the right setup and context, Cursor can finish the majority of a task with a single prompt. All that’s left is to refine it manually and make sure it works as intended. We have a design system in place and Storybook integration, so developers can pick the building blocks and create exactly what they need on the frontend.

When AI eliminates so much manual work, engineers can focus on logic and be more creative. Would you agree?

Definitely. Used correctly, AI becomes a force multiplier. Before, we had to read our internal API documentation and connect the dots ourselves to build functions. Now, we just feed our docs to Cursor and generate the calls we need. We just have to understand the flow and inner workings of the code. AI allows us to focus more on the actual user experience.

I keep telling my team that we’re shifting from software engineering to product engineering. We can finally focus on customer problems and user experience instead of spending our days figuring out how to wrangle an API.

I’m starting to think that if you’re smart enough, you can build anything you want with AI. But let’s not get carried away – my next question is about drawbacks.

For example, some people say that AI limits creativity. Have you seen this happen among frontend devs?

I think it boosts creativity. It takes away most of the boring stuff, the mundane tasks that nobody ever really enjoyed. You have more time to think about the actual page or view you’re building, and crucial issues like its performance and UX.

AI brings you closer to your customers. You can focus on what really matters. There’s less thinking about code and more thinking about the product and the user. Product work is more creative than software engineering.

Let me give you one interesting example: one of our new features includes a big page for bulk invitations.

Bulk actions are always a struggle for the frontend because you need to do a lot of stuff in a really small window. Imagine uploading a spreadsheet with a thousand rows. On every single row, you have empty fields that you may need to pop up an error for. But you can’t just drown the user in a thousand errors at once – that would be terrible UX. What to do?

To solve this, you need to get creative and find a smart way to make it work for the user. With AI, you can provide a nice template for filling in the data or use AI to fill in the data for them.
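To make the bulk-upload problem concrete, here is a minimal TypeScript sketch of one way to avoid drowning the user in errors: group missing values by field and show one message per field with a few example rows. This is an illustration, not Native Teams’ implementation; the `InviteRow` shape, the field names, and `summarizeMissingFields` are all assumptions.

```typescript
// Hypothetical row shape for a bulk-invitation upload (field names are assumptions).
type InviteRow = {
  email?: string;
  fullName?: string;
  country?: string;
};

type FieldName = keyof InviteRow;

const REQUIRED_FIELDS: FieldName[] = ["email", "fullName", "country"];

interface FieldIssue {
  field: FieldName;
  count: number; // how many rows are missing this field
  firstRows: number[]; // a few 1-based row numbers to point the user at
}

// Collapse per-cell errors into one issue per field, so the UI can render
// a handful of messages instead of one message per broken cell.
function summarizeMissingFields(rows: InviteRow[], maxExamples = 3): FieldIssue[] {
  const summary: FieldIssue[] = [];
  for (const field of REQUIRED_FIELDS) {
    const issue: FieldIssue = { field, count: 0, firstRows: [] };
    rows.forEach((row, index) => {
      // Treat undefined and whitespace-only values as missing.
      if ((row[field] ?? "").trim() !== "") return;
      issue.count += 1;
      if (issue.firstRows.length < maxExamples) issue.firstRows.push(index + 1);
    });
    if (issue.count > 0) summary.push(issue);
  }
  return summary;
}

// Usage: four uploaded rows, some with gaps.
const uploadedRows: InviteRow[] = [
  { email: "a@example.com", fullName: "Ada", country: "GR" },
  { email: "", fullName: "Bo", country: "PL" },
  { email: "c@example.com", fullName: "   " },
  { fullName: "Dee", country: "" },
];

for (const issue of summarizeMissingFields(uploadedRows)) {
  console.log(
    `${issue.count} row(s) missing "${issue.field}", e.g. rows ${issue.firstRows.join(", ")}`,
  );
}
```

With a thousand-row spreadsheet, the user would still see at most one message per required field, each pointing at the first few affected rows.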

The AI toolset

Let’s dig deeper into the actual AI tools. How do you use them responsibly? How much hand-holding is involved in coding with AI?

The manual part mostly involves overseeing the AI’s work and testing the code at the end. However, I would never use the agent mode in our codebase. It basically means you let the AI do whatever it wants. I don’t recommend using it.

You have to make sure that AI knows what you’re building, what the end result should look like, what your code and formatting standards are, and even how you want it to reply to your prompts.

The most important tip I can give to people who want to use AI coding tools responsibly is to always set rules for AI. You have to give it constraints. Our process for coding with AI is basically this: write a small prompt, discuss the output, and repeat.

At the end of the day, it’s still your job to understand what you build.

That makes a lot of sense. What about additional tools for error detection and review, like CodeRabbit? Can you rely on them too much?

We don’t use those yet. UI makes up the majority of frontend tasks. AI is not yet sophisticated enough to understand how and why you want to style your layout.

But AI can understand the API or any flows on that side of things, which is why I’d like to work with an AI assistant for merge request creation. I believe it could help us stick to best practices. Rather than deciding whether to merge the code, it could perhaps provide a summary of the request with any issues that require looking into.

What about code optimization? This instinctively feels like the safest area for using AI tools in the long term. Would you agree with that?

Before you optimize anything, you have to first figure out what exactly is suboptimal in your code. With AI, you can do performance profiling faster.

Debugging manually is time-consuming. Now, you can just ask AI to do profiling and give you all the data you need. You come in at the end and focus on how to optimize a specific loop or fix a defective function.

You can’t optimize something you can’t see. AI-powered debugging and performance profiling give you that context. However, you need serious prompting skills to break your code into small parts and find what needs fixing.

The more context you give, the better answer you’ll get, correct?

The best advice I keep hearing from AI power users is to avoid big tasks. Whatever job you need to do, break it down into small, simple steps.

When you give AI too much context, it cannot consider everything, and the output will disappoint you.

I noticed the exact same thing when working with Cursor. I started out thinking that I could one-shot a whole app with a super long prompt. I quickly realized that it works much better with small tasks.

Exactly. You can’t just open Cursor and say, “Build Facebook.”

I’m curious about the logistics of switching to Cursor as a team. How do you enforce best practices for AI usage?

My approach is to set rules for Cursor and carefully explain them to the devs.

We discuss those rules internally and make sure everyone understands why they’re necessary – why prompt X works, why add specific configs, why specify the packages we’re using, and so on. This helps avoid incorrect replies, messy code, and unacceptable mistakes like changing the programming language.

I’ve seen people complain that AI gives them Python code for a JavaScript codebase, and it doesn’t surprise me. If they haven’t specified the rules and the context, the AI is probably just going for the easiest solution possible.

Rules are a priority. If I define rules for Cursor about how to call APIs and add a constraint like “we use Tailwind,” the team will have an easier time working with AI and understanding its outputs.
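As an illustration of what such a rule set might contain, here is a minimal TypeScript sketch that keeps the rules as typed data and renders them into plain text for an AI assistant. The `AssistantRules` fields, the example values, and `renderRules` are hypothetical; the interview does not describe Native Teams’ actual rules or how they are stored.

```typescript
// Hypothetical rule set; the fields and wording are illustrative assumptions.
interface AssistantRules {
  language: string;
  styling: string;
  packages: string[];
  apiConventions: string[];
  replyStyle: string;
}

// Render the rules as a short bullet list that can be pasted wherever
// the editor reads its project rules from.
function renderRules(rules: AssistantRules): string {
  const lines = [
    `- Write all code in ${rules.language}; never switch languages.`,
    `- Styling: ${rules.styling}.`,
    `- Use only these packages unless asked otherwise: ${rules.packages.join(", ")}.`,
    ...rules.apiConventions.map((convention) => `- API calls: ${convention}.`),
    `- Replies: ${rules.replyStyle}.`,
  ];
  return lines.join("\n");
}

// Usage with made-up values.
const frontendRules: AssistantRules = {
  language: "TypeScript",
  styling: "we use Tailwind",
  packages: ["react", "tailwindcss"],
  apiConventions: ["go through the shared API client", "handle error responses explicitly"],
  replyStyle: "show the changed code first, then a short explanation",
};

console.log(renderRules(frontendRules));
```

Keeping the rules in one reviewed place gives the team a single text to discuss and update when a rule turns out to be wrong.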

Is AI already an essential part of the developer’s toolkit?

Yes. I’m very confident about that. If you still don’t use a code editor integrated with AI, you simply can’t compete with those who do. Once you begin using these tools, the immediate difference is night and day – great coders who understand prompting can probably do 10x the velocity of someone who’s just learning to use it.

Some companies already refuse to hire devs who don’t use AI. Working in the industry and knowing nothing about it is a bit like being illiterate. You can’t ignore it, even if you’re a senior with years of experience. A smart junior who’s a power user of AI can already do what you can, probably even more.

I think the only businesses that still struggle to adopt AI code editors are those with the most sensitive data. Once they figure out how to use AI tools without the need to connect with third-party servers, that constraint will go away.

AI & remote work

Let’s switch gears for a moment and talk about remote work. I understand that Native Teams loves it.

Do you think it’s more difficult to enforce AI usage standards on remote teams?

Remote work is difficult to manage at scale. The bigger you grow, the more layers there are in your organization. The structure of a 30-person company is much different than that of a 300-person one, and the flow of information gets very complicated.

You have to consider different time zones and work hours. This calls for a culture where information can spread around freely and for processes that ensure that important insights always reach the right person.

You have to enforce some standards in different ways. I can give you an example that’s not related to AI – we’ve made it mandatory to use Prettier with Husky for pre-commit hooks. Everything has to be prettified before it’s pushed to our codebase. Our setup is such that any commit that doesn’t comply with that will be blocked automatically.
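The blocking behavior described here can be modeled in a few lines of TypeScript. This is only a sketch of the gate logic, not the team’s setup: in a real project, Husky runs the pre-commit hook and Prettier performs the formatting check. `preCommitGate`, `StagedFile`, and the toy `looksFormatted` check are assumptions made to keep the example self-contained.

```typescript
// A formatting check is injected so the sketch stays self-contained;
// in a real hook this role is played by the formatter itself.
type FormatCheck = (filePath: string, source: string) => boolean;

interface StagedFile {
  path: string;
  source: string;
}

interface GateResult {
  allowed: boolean;
  unformatted: string[]; // paths that failed the check
}

// The commit is allowed only if every staged file passes the check.
function preCommitGate(staged: StagedFile[], isFormatted: FormatCheck): GateResult {
  const unformatted = staged
    .filter((file) => !isFormatted(file.path, file.source))
    .map((file) => file.path);
  return { allowed: unformatted.length === 0, unformatted };
}

// Toy stand-in for a real formatter (assumption): the file must end with a
// newline and contain no trailing spaces or tabs at the end of any line.
const looksFormatted: FormatCheck = (_path, source) =>
  source.slice(-1) === "\n" && !/[ \t]+$/m.test(source);

// Usage: the second file has trailing whitespace, so the commit is blocked.
const gate = preCommitGate(
  [
    { path: "src/Button.tsx", source: "export const a = 1;\n" },
    { path: "src/Form.tsx", source: "export const b = 2;  \n" },
  ],
  looksFormatted,
);

console.log(gate.allowed ? "commit allowed" : `commit blocked: ${gate.unformatted.join(", ")}`);
```

The point of automating the gate is that the standard no longer depends on anyone remembering it, which matters more as a remote team grows.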

But when it comes to AI, I think it’s fairly simple: the standard we require is to use it as much as possible and always check if everything is fine before pushing it. That’s it.

General advice

What’s your advice to a developer who’s just starting to implement AI tools, perhaps just trying out Copilot or Cursor? How should they approach these tools to actually become better developers?

AI is evolving too fast to really keep up with it right now. Best practices change before you can fully understand them. You need to figure out a way to stay on top of it all.

Apart from that, you need a foundational understanding of what you’re using – how prompting works, how agents work, etc. Then, you also need to keep testing things, like going through different models and seeing how they reply. Understand why some people prefer Anthropic’s Sonnet over OpenAI’s GPT-4o, and why some consider Anthropic’s models better for code and OpenAI’s better for complex reasoning.

My next piece of advice is to use AI to transition to product engineering, even if you’re a backend developer. AI gives you the extra time you need to finally focus on the end user and not just the intricacies of software engineering.

And what would you advise managers who want to foster a pro-AI culture?

If you know Peter Thiel’s classic “Zero to One,” it’s easy to see that we’re in the midst of a zero-to-one moment – meaning that the industry will never be the same as it was before AI.

As someone who almost missed its departure, I can confidently say that you don’t want to be late for the AI train. If AI wasn’t a big part of our system right now, I might have ignored it until next year or so – and I believe that would have been too late to catch up.

As a manager, you play an important part in keeping your developers in the loop. Provide time and resources for your team to try things out, empower yourself to do the same, and watch what happens.

If you get an idea, do a tiny proof of concept. It might not work out, but you will definitely learn a lot in the process. And if it does work out, it will motivate the whole team to keep trying even more.

I think that’s the biggest thing I’d tell my younger self – test more things, keep trying new tech, play around with it, and stop being so afraid.

Resources

It’s time for our standard final question: what should managers read, watch, or listen to if they want to learn more about AI?

Hot take alert: I don’t like reading engineering books or listening to engineering podcasts. I just scroll Twitter or X.

All the experts and main AI players, like Guillermo Rauch or Sam Altman, are there, announcing stuff and discussing the latest developments. It’s the fastest, most reliable way to stay up to date.

Sure, books and podcasts have lots of value, especially when it comes to learning fundamentals. However, they’re not updated in real time – Twitter is. You see announcements as soon as they’re posted.

Plus, you can find a lot of stuff to copy-paste and immediately try out. Somebody who’s already mastered Cursor might post a directory with rules that you can test without having to come up with your own from scratch.

Consider this: we had a 4-hour-long AI workshop at Native Teams sometime around November. The first three hours consisted of more than 2,000 slides, all with information from Twitter announcements. Anyone who wasn’t using Twitter had to process all of it right there and then. Meanwhile, I barely had to pay attention because I saw almost all of it while it was happening.

What’s next? 3 tips for CTOs to increase velocity with AI

Dimitris believes that, when used correctly, AI can be a force multiplier, a creativity boost, and a way for engineers to spend more time adding value for the user instead of typing out code. To get there, he recommends the following:

- make sure AI knows exactly what you’re building – what the end result should look like, your code standards, formatting, and even how you want it to reply to your prompt,

- break down big jobs into small tasks – you can describe them in short prompts and get correct, good-quality code out of AI more easily,

- stay open-minded – be willing to try out new tools quickly instead of letting yourself be slowed down by anxiety.

Use Dimitris’ advice to increase the velocity of your software development process with AI, but remember that it’s still your team’s job to understand what they’re building.

Do you want to learn more about how Native Teams helps companies hire and manage their global teams?

Check out the website for more information and pricing.