02 October 2018

Making the world beautiful with ARCore Android

If you’re unsatisfied with the world as it is, you may feel tempted to enhance it and make it more beautiful. In 2018, Google and their ARCore Android can help you achieve that. ARCore is a development platform for building augmented reality apps. In this article, I’ll walk you through its features and show how the technology can enrich your everyday life. I’ll also show you, step by step, how to make your own ARCore apps.

You could say that augmented reality (AR) allows you to create your own world using your mobile phone.

In technical terms, AR apps make it possible to enhance your real-world environment with computer-generated 3D models, images, and videos. When you look at something through your phone’s camera, you see and hear all the virtual additions as part of the environment, provided, of course, that you have installed the required AR app.

Why is AR one of the most popular technologies of 2018? It’s probably because there are so many ways you can use it – the only limit is your imagination. AR has the potential to impact and enrich all aspects of our lives, including mobile development.

What are ARCore by Google and an ARCore app?

Since my article focuses on ARCore, let’s begin with a short trip into the past.

On February 23, 2018, Google released ARCore ver. 1.0.0. Since then, Android has supported augmented reality. In the first version, Google implemented basic features, like tracking a phone’s position in the real world, detecting the size and location of environmental planes, and understanding the environment’s current lighting conditions. In addition to these features, ARCore 1.0 introduced oriented points to place virtual objects on non-horizontal or non-planar textured surfaces.

On April 17, 2018, Google released ARCore Android ver. 1.1.0, which included:

- API for synchronously (at the same frame) acquiring the image from a camera frame (without manually copying the image buffer from the GPU to the CPU);

- API for getting color correction information for the images captured by the camera.

On May 8, 2018, we got a huge release, ver. 1.2.0, in which Google added:

- Cloud Anchors API that enables developers to build shared Google ARCore experiences across iOS and Android. This is done by allowing anchors created on one device to be transformed into cloud anchors and shared with users on other devices;

- Augmented Images API that enables ARCore apps to detect and track images;

- vertical plane detection, which means that ARCore started detecting both horizontal and vertical planes.

At the time of writing this post, Google has already released ARCore Android ver. 1.3.0, which supports:

- New method on Frame:

  - getAndroidSensorPose() returns the world-space pose of the Android sensor frame.

- New methods on Camera:

  - getImageIntrinsics() returns the camera image’s camera intrinsics,

  - getTextureIntrinsics() returns the camera texture’s camera intrinsics.

- New class CameraIntrinsics provides the unrotated physical characteristics of a camera, which consist of its focal length, principal point, and image dimensions.

- New method on Session:

  - getConfig() returns the config set by Session.configure().
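
To give a feel for these additions, here is a minimal Kotlin sketch that reads them for a single frame. The helper name `logFrameDetails` and the log tag are hypothetical; the session and frame are assumed to come from an already running ARCore session.

```kotlin
import android.util.Log
import com.google.ar.core.Frame
import com.google.ar.core.Session

// Hypothetical helper: logs what the 1.3.0 additions expose for one frame.
fun logFrameDetails(session: Session, frame: Frame) {
    // World-space pose of the Android sensor frame
    val sensorPose = frame.androidSensorPose

    // Intrinsics of the CPU-accessible camera image
    val intrinsics = frame.camera.imageIntrinsics
    val focal = intrinsics.focalLength         // two values: x and y
    val principal = intrinsics.principalPoint  // two values: x and y
    val size = intrinsics.imageDimensions      // two values: width and height

    Log.d("ArDemo", "sensor pose: $sensorPose")
    Log.d("ArDemo", "focal length: ${focal[0]} x ${focal[1]}")
    Log.d("ArDemo", "principal point: ${principal[0]}, ${principal[1]}")
    Log.d("ArDemo", "image size: ${size[0]} x ${size[1]}")

    // The config that was last passed to Session.configure()
    Log.d("ArDemo", "update mode: ${session.config.updateMode}")
}
```

`frame.camera.textureIntrinsics` can be read the same way when you need the values for the GPU texture instead of the CPU image.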

ARCore Android in action

In the previous section, I wrote about the functionalities of Google ARCore. Now, let’s leave the technical details behind for a while and appreciate just how visually impressive augmented reality is. Are you ready to see how you can incorporate it into your life?

Imagine it’s beautiful outside and you’re going on a bike trip to a brand-new place. You’ve never been there before, so, of course, you’ll need a guide. With augmented reality apps, your phone can serve as an excellent guide, showing you where to go based on your location. Here’s one example.

Or let’s say you’ve just moved to a big city, but you don’t have any friends there yet, so you don’t know how to get to all the interesting places. If you have a compatible device, it’ll locate all the points of interest nearby and show them to you in real time. All you need to do is point your phone at the street.

ARCore Android can also assist you when you want to decorate your new place or buy a new piece of furniture that doesn’t clash with the rest. Just point your phone where you want to place the new stuff.

There are a lot of ideas and concepts for how to use AR. This video shows some examples:

How to make an ARCore app?

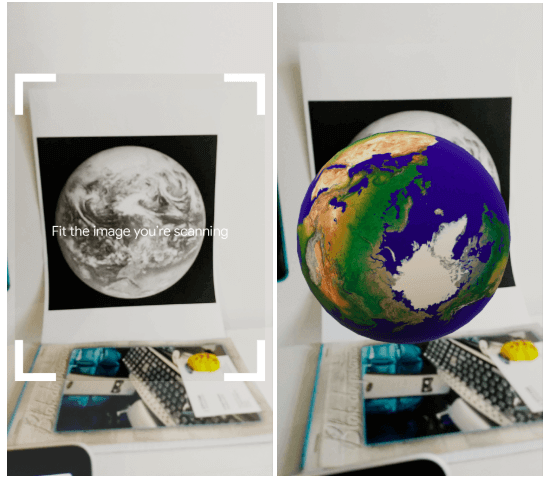

In the remaining part of this article, I’ll teach you how to create a simple ARCore app with markers and videos. In ARCore, markers are 2D images in the user’s environment, such as posters or product packaging. In your app, you will use markers to show a 3D model of the Earth and a short video played after the user clicks on the model. This will be the first step to creating something bigger later on, e.g. your own guide for a museum. Let’s get to work!

First of all, you need one of the ARCore-supported devices. Start by configuring the environment, which requires basic knowledge of Android Studio and Kotlin. The next step is configuring your build.gradle. Remember that the minimum SDK version for ARCore is 24. In your build.gradle, add dependencies:
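
A minimal sketch of those build.gradle entries, assuming the ARCore and Sceneform UX artifacts at version 1.5.0. The version number is an assumption, so substitute whichever release you target and keep both artifacts on the same version.

```groovy
android {
    defaultConfig {
        minSdkVersion(24) // ARCore's minimum SDK version
    }
    compileOptions {
        // Sceneform relies on Java 8 language features
        sourceCompatibility = JavaVersion.VERSION_1_8
        targetCompatibility = JavaVersion.VERSION_1_8
    }
}

dependencies {
    // ARCore itself
    implementation("com.google.ar:core:1.5.0")
    // Sceneform UX, which also provides ArFragment
    implementation("com.google.ar.sceneform.ux:sceneform-ux:1.5.0")
}
```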

In your AndroidManifest.xml, add permissions for the camera:
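
A minimal sketch of the manifest entries, assuming the app should only be available on ARCore-capable devices. The package name `com.example.ardemo` is a placeholder.

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.ardemo">

    <!-- ARCore needs access to the camera -->
    <uses-permission android:name="android.permission.CAMERA" />

    <!-- Limits store visibility to devices that support ARCore -->
    <uses-feature
        android:name="android.hardware.camera.ar"
        android:required="true" />

    <application>
        <!-- Marks the app as "AR Required" -->
        <meta-data
            android:name="com.google.ar.core"
            android:value="required" />
    </application>
</manifest>
```

Keep in mind that the camera permission must also be granted by the user at runtime before the AR session can start.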

When your application runs, it has to make sure that it is running on an ARCore-supported device with ARCore installed. There are two ways to do that:

- use ArFragment – you don’t need to write code to check if ARCore Android is installed on your device; ArFragment will do it for you!

- write code that will check all ARCore statuses.

Here’s an example of the latter:
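
A minimal sketch of such a check, not necessarily the exact code used in this project. The helper names `isArCoreSupported` and `createSessionOrNull` are hypothetical; the calls on `ArCoreApk` and `Session` come from the ARCore SDK.

```kotlin
import android.app.Activity
import android.util.Log
import com.google.ar.core.ArCoreApk
import com.google.ar.core.Session
import com.google.ar.core.exceptions.UnavailableException

// Hypothetical helper: true when the device is known to support ARCore.
fun isArCoreSupported(activity: Activity): Boolean {
    val availability = ArCoreApk.getInstance().checkAvailability(activity)
    // A transient result means the check is still in progress; retry a bit later.
    return !availability.isTransient && availability.isSupported
}

// Hypothetical helper: returns a Session, or null when ARCore is unavailable
// or its installation has just been requested.
fun createSessionOrNull(activity: Activity, userRequestedInstall: Boolean): Session? =
    try {
        val status = ArCoreApk.getInstance().requestInstall(activity, userRequestedInstall)
        if (status == ArCoreApk.InstallStatus.INSTALL_REQUESTED) {
            // The install flow pauses the activity; call this again from onResume().
            null
        } else {
            Session(activity)
        }
    } catch (e: UnavailableException) {
        // Covers an unsupported device, a declined install, and an outdated ARCore or SDK.
        Log.e("ArDemo", "ARCore is not available", e)
        null
    }
```

Calling `createSessionOrNull` from `onResume()` lets the app pick up where it left off once the user returns from the ARCore installation screen.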

Okay, now that your environment is up and running, you can start building the app. As I mentioned before, it will show you a fraction of ARCore’s power.

I decided to use popular markers (2D images). Inside ARCore Android, they’re called AugmentedImages.

First of all, you need to add images that your app will recognize and show as 3D models on your screen. You also need to install the Sceneform plugin for easy Sceneform asset import. This can be done as follows:

- go to Android Studio -> Preferences -> Plugins,

- select Browse repositories… -> Search Google Sceneform Tools,

- click Install.

Now you have everything to start importing 3D models into your project.

Time to find some models!

You may start with this website. Just remember that models should be in OBJ, FBX, or glTF format. For more information about importing 3D models into your ARCore app, check out this site.

Great! You’ve imported models into your app. Now, let’s have some fun! Create an assets folder inside your project, in your app module. You’ll be saving images for markers and videos there. Remember to use only .jpg and .png image files.

With images and videos in the assets folder plus imported models, you can finally start coding. First of all, you need to create a method for loading an image as a marker, from assets into Bitmap:
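
A minimal sketch of such a loader. The function name `loadMarkerBitmap` is hypothetical, and `assetName` is whatever file you placed in the assets folder.

```kotlin
import android.content.Context
import android.graphics.Bitmap
import android.graphics.BitmapFactory
import java.io.IOException

// Hypothetical helper: decodes an image from the assets folder,
// returning null when the file is missing or cannot be decoded.
fun loadMarkerBitmap(context: Context, assetName: String): Bitmap? =
    try {
        // use {} closes the stream even if decoding fails
        context.assets.open(assetName).use { stream ->
            BitmapFactory.decodeStream(stream)
        }
    } catch (e: IOException) {
        null
    }
```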

In my example, I used an image from google samples for ARCore Android, but you can choose any image you want. Now, you need to add a database to store all the images for markers, for recognition purposes. In order to do that, add:
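
A minimal sketch of building the database, assuming a single marker. The function name `buildMarkerDatabase` and the marker name `"earth"` are hypothetical.

```kotlin
import android.graphics.Bitmap
import com.google.ar.core.AugmentedImageDatabase
import com.google.ar.core.Session

// Hypothetical helper: creates a database with one marker image.
fun buildMarkerDatabase(session: Session, marker: Bitmap): AugmentedImageDatabase {
    val database = AugmentedImageDatabase(session)
    // The name is returned later by AugmentedImage.getName(),
    // which lets you tell markers apart when there are several.
    database.addImage("earth", marker)
    return database
}
```

`addImage` rejects images with too few distinctive features, so a marker with rich, non-repeating detail tends to work best.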

As you can see, you’ve built AugmentedImageDatabase and used the previous method to add an image. After that, it’s time to configure a session:
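
A minimal sketch of the session configuration, assuming the database built in the previous step. The function name `configureSession` is hypothetical.

```kotlin
import com.google.ar.core.AugmentedImageDatabase
import com.google.ar.core.Config
import com.google.ar.core.Session

// Hypothetical helper: attaches the marker database to the session.
fun configureSession(session: Session, markers: AugmentedImageDatabase) {
    val config = Config(session)
    config.augmentedImageDatabase = markers
    // Sceneform expects the newest camera image without blocking the render loop
    config.updateMode = Config.UpdateMode.LATEST_CAMERA_IMAGE
    session.configure(config)
}
```

If you render with Sceneform’s ArSceneView, hand the configured session over with `arSceneView.setupSession(session)` afterwards.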

Invoke this method from onResume().

So, you’ve created methods for importing images from assets as well as adding them to the database and session configuration. The only thing left to implement is showing 3D models after a marker is scanned. Piece of cake! Start by creating an update method, which you’ll use for updating the displayed model:
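
A minimal sketch of such an update method, wrapped in a small class so that it can remember which markers already have a model. The class name `MarkerModelPlacer` is hypothetical, and the renderable is assumed to be loaded already.

```kotlin
import com.google.ar.core.AugmentedImage
import com.google.ar.core.Frame
import com.google.ar.core.TrackingState
import com.google.ar.sceneform.AnchorNode
import com.google.ar.sceneform.Scene
import com.google.ar.sceneform.rendering.Renderable

// Hypothetical helper: shows `model` on every marker that ARCore is tracking.
class MarkerModelPlacer(private val scene: Scene, private val model: Renderable) {

    // Markers that already have a node in the scene
    private val placed = HashMap<AugmentedImage, AnchorNode>()

    fun onFrame(frame: Frame) {
        for (image in frame.getUpdatedTrackables(AugmentedImage::class.java)) {
            when (image.trackingState) {
                TrackingState.TRACKING -> if (image !in placed) {
                    // Anchor the model at the center of the detected marker
                    val node = AnchorNode(image.createAnchor(image.centerPose))
                    node.renderable = model
                    node.setParent(scene)
                    placed[image] = node
                }
                TrackingState.STOPPED ->
                    // The marker will not be tracked again, so clean up
                    placed.remove(image)?.let { scene.removeChild(it) }
                else -> Unit // PAUSED: keep waiting
            }
        }
    }
}
```

The renderable itself can be loaded with `ModelRenderable.builder()`, pointing `setSource` at the .sfb file produced by the Sceneform import.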

The last step is adding this method in the update listener for the surface view:
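
A minimal sketch of the registration, assuming an ArSceneView and a Sceneform release that provides `Scene.addOnUpdateListener`. The function name `registerFrameCallback` is hypothetical.

```kotlin
import com.google.ar.core.Frame
import com.google.ar.sceneform.ArSceneView

// Hypothetical helper: calls `onFrame` once per rendered frame.
fun registerFrameCallback(arSceneView: ArSceneView, onFrame: (Frame) -> Unit) {
    arSceneView.scene.addOnUpdateListener {
        // arFrame is null until the session delivers its first frame
        arSceneView.arFrame?.let(onFrame)
    }
}
```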

That’s it! Try scanning the image and you should see a 3D model of the Earth (or whatever model you chose) on your screen.

How to add a video in ARCore Android

Your ARCore app now allows you to import images and 3D models, add them to the database, and show a 3D model after a marker is scanned. But one thing is still missing – showing and playing videos. ARCore doesn’t support playing videos in a straightforward way. To implement it, you need to use OpenGL. I used the code from here.

This is exactly what we want. If you create a class from the gist, the only thing left to do is add some code to your MainActivity class:

Step 1. Implement GLSurfaceView.Renderer

Step 2. In your layout for MainActivity, add:

Step 3. In the onCreate method, add:
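
A minimal sketch of a typical GLSurfaceView setup for this step, assuming OpenGL ES 2.0 and a renderer that implements GLSurfaceView.Renderer. The function name `setUpVideoSurface` is hypothetical, and the gist’s own setup may differ.

```kotlin
import android.opengl.GLSurfaceView

// Hypothetical helper: prepares the surface view that draws the video.
fun setUpVideoSurface(surfaceView: GLSurfaceView, renderer: GLSurfaceView.Renderer) {
    // Keep the GL context alive when the activity is paused
    surfaceView.preserveEGLContextOnPause = true
    surfaceView.setEGLContextClientVersion(2)
    // RGBA 8888 with a 16-bit depth buffer and no stencil buffer
    surfaceView.setEGLConfigChooser(8, 8, 8, 8, 16, 0)
    // The renderer must be set after the EGL options above
    surfaceView.setRenderer(renderer)
    // Redraw every frame so the video keeps playing smoothly
    surfaceView.renderMode = GLSurfaceView.RENDERMODE_CONTINUOUSLY
}
```

From `onCreate`, you would call it with the view found in your layout and `this` as the renderer.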

Step 4. Add code for drawing a frame with a video:

Step 5. Add a click listener for your 3D model in the onUpdateFrame method:
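
A minimal sketch of the click listener, assuming the model is attached to a Sceneform node. The function name `playOnTap` and the `startVideo` callback are hypothetical; the callback stands in for whatever starts playback in your video renderer.

```kotlin
import com.google.ar.sceneform.Node

// Hypothetical helper: starts the video when the user taps the model.
fun playOnTap(modelNode: Node, startVideo: () -> Unit) {
    // The hit test result and motion event are not needed here
    modelNode.setOnTapListener { _, _ -> startVideo() }
}
```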

That’s it! You can find the full code for MainActivity here. After applying all the previous steps, you should get something like this.

Congratulations! You’ve just created an app that can display a 3D model and, after you click on it, shows you a video! It’s amazing what you can do with ARCore Android.

Creating a more beautiful world with augmented reality is very simple

Google ARCore gives you the freedom to make almost anything you want. Why almost? The technology is still very young – with amazing potential, but without many features we need. Google developers are gradually adding new features and fixing bugs, but there’s a lot to be done. Still, despite all the bugs and missing features, ARCore Android is worth getting familiar with. At least, that’s what I think.

Disclaimer

In my project, I got my info from the article “Playing video in ARCore using OpenGL”. I’ve also used some code from Google ARCore samples. You can find all the code for this project here.